The “1 trillion” never existed in the first place. It was all hype by a bunch of Tech-Bros, huffing each other’s farts.

Good. LLM AIs are overhyped, overused garbage. If China putting one out is what it takes to hack the legs out from under its proliferation, then I’ll take it.

Overhyped? Sure, absolutely.

Overused garbage? That’s incredibly hyperbolic. That’s like saying the calculator is garbage. The small company where I work as a software developer has already saved countless man hours by utilising LLMs as tools, which is all they are if you take away the hype; a tool to help skilled individuals work more efficiently. Not to replace skilled individuals entirely, as Sam Dead eyes Altman would have you believe.

LLMs as tools,

Yes, in the same way that buying a CD from the store, ripping to your hard drive, and returning the CD is a tool.

Cutting the cost by 97% will do the opposite of hampering proliferation.

What DeepSeek has done is to eliminate the threat of “exclusive” AI tools - ones that only a handful of mega-corps can dictate terms of use for.

Now you can have a Wikipedia-style AI (or a Wookiepedia AI, for that matter) that’s divorced from the C-levels looking to monopolize sectors of the service economy.

It’s been known for months that they were living on borrowed time: Google “We Have No Moat, And Neither Does OpenAI”: Leaked Internal Google Document Claims Open Source AI Will Outcompete Google and OpenAI

It’s not about hampering proliferation, it’s about breaking the hype bubble. Some of the western AI companies have been pitching to have hundreds of billions in federal dollars devoted to investing in new giant AI models and the gigawatts of power needed to run them. They’ve been pitching a Manhattan Project scale infrastructure build out to facilitate AI, all in the name of national security.

You can only justify that kind of federal intervention if it’s clear there’s no other way. And this story here shows that the existing AI models aren’t operating anywhere near where they could be in terms of efficiency. Before we pour hundreds of billions into giant data centers and energy generation, it would behoove us to first extract all the gains we can from increased model efficiency. The big players like OpenAI haven’t even been pushing efficiency hard. They’ve just been vacuuming up ever greater amounts of money to solve the problem the big and stupid way - just build really huge data centers running big inefficient models.

No, but it would be nice if it turned back into the tool it was, when it was called machine learning, like it was for the last decade before the bubble started.

Possibly, but in my view, this will simply accelerate our progress towards the “bust” part of the existing boom-bust cycle that we’ve come to expect with new technologies.

They show up, get overhyped, loads of money is invested, eventually the cost craters and the availability becomes widespread, suddenly it doesn’t look new and shiny to investors since everyone can use it for extremely cheap, so the overvalued companies lose that valuation, the companies using it solely for pleasing investors drop it since it’s no longer useful, and primarily just the implementations that actually improved the products stick around due to user pressure rather than investor pressure.

Obviously this isn’t a perfect description of how everything in the world will always play out in every circumstance every time, but I hope it gets the general point across.

One of those rare lucid moments by the stock market? Is this the market correction that everyone knew was coming, or is some famous techbro going to technobabble some more about AI overlords and they return to their fantasy values?

Most rational market: Sell off NVIDIA stock after Chinese company trains a model on NVIDIA cards.

Anyways NVIDIA still up 1900% since 2020 …

how fragile is this tower?

It’s quite lucid. The new thing uses a fraction of compute compared to the old thing for the same results, so Nvidia cards for example are going to be in way less demand. That being said Nvidia stock was way too high surfing on the AI hype for the last like 2 years, and despite it plunging it’s not even back to normal.

How is the “fraction of compute” being verified? Is the model available for independent analysis?

It’s freely available with a permissive license, but I don’t think that claim has been verified yet.

And the data is not available. Knowing the weights of a model doesn’t really tell us much about its training costs.

My understanding is it’s just an LLM (not multimodal) and the train time/cost looks the same for most of these.

- DeepSeek ~$6million https://www.theregister.com/2025/01/26/deepseek_r1_ai_cot/?td=rt-3a

- Llama 2 estimated ~$4-5 million https://www.visualcapitalist.com/training-costs-of-ai-models-over-time/

I feel like the world’s gone crazy, but OpenAI (and others) is pursuing more complex model designs with multimodal. Those are going to be more expensive due to image/video/audio processing. Unless I’m missing something, that would probably account for the cost difference in current vs previous iterations.

One of the things you’re missing is the same techniques are applicable to multimodality. They’ve already released a multimodal model: https://seekingalpha.com/news/4398945-deepseek-releases-open-source-ai-multimodal-model-janus-pro-7b

The thing is that R1 is being compared to gpt4 or in some cases gpt4o. That model cost OpenAI something like $80M to train, so roughly equivalent performance for an order of magnitude less cost is not nothing. DeepSeek also says the model is much cheaper to run for inference as well, though I can’t find any figures on that.

My main point is that gpt4o and the other models it’s being compared to are multimodal, while R1 is only an LLM from what I can find.

Something trained on audio/pictures/videos/text is probably going to cost more than just text.

But maybe I’m missing something.

Yea except DeepSeek released a combined Multimodal/generation model that has similar performance to contemporaries and a similar level of reduced training cost ~20 hours ago:

Holy smoke balls. I wonder what else they have ready to release over the next few weeks. They might have a whole suite of things just waiting to strategically deploy

The original gpt4 is just an LLM though, not multimodal, and the training cost for that is still estimated to be over 10x R1’s if you believe the numbers. I think where R1 is compared to 4o is in so-called reasoning, where you can see the chain of thought or internal prompt paths that the model uses to (expensively) produce an output.

I’m not sure how good a source it is, but Wikipedia says it was multimodal and came out about two years ago - https://en.m.wikipedia.org/wiki/GPT-4.

That being said, the comparisons are of LLM benchmarks against gpt4o, so maybe it’s a valid argument for the LLM capabilities.

However, I think a lot of the more recent models are pursuing architectures with the ability to act on their own, like Claude’s computer use - https://docs.anthropic.com/en/docs/build-with-claude/computer-use, which DeepSeek R1 is not attempting.

Edit: and I think the real money will be in the more complex models focused on workflow automation.

If AI is cheaper, then we may use even more of it, and that would soak up at least some of the slack, though I have no idea how much.

Emergence of DeepSeek raises doubts about sustainability of western artificial intelligence boom

Is the “emergence of DeepSeek” really what raised doubts? Are we really sure there haven’t been lots of doubts raised previous to this? Doubts raised by intelligent people who know what they’re talking about?

Ah, but those “intelligent” people cannot be very intelligent if they are not billionaires. After all, the AI companies know exactly how to assess intelligence:

Microsoft and OpenAI have a very specific, internal definition of artificial general intelligence (AGI) based on the startup’s profits, according to a new report from The Information. … The two companies reportedly signed an agreement last year stating OpenAI has only achieved AGI when it develops AI systems that can generate at least $100 billion in profits. That’s far from the rigorous technical and philosophical definition of AGI many expect. (Source)

I’d argue this is even worse than Sputnik for the US because Sputnik spurred technological development that boosted the economy. Meanwhile, this is popping the economic bubble in the US built around the AI subscription model.

Lol serves you right for pushing AI onto us without our consent

The determination to make us use it whether we want to or not really makes me resent it.

Hilarious that this happens the week of the 5090 release, too. Wonder if it’ll affect things there.

Apparently they have barely produced any so they will all be sold out anyway.

And without the fake frame bullshit they’re using to pad their numbers, its capabilities scale linearly with the 4090. The 6090 just has more cores, RAM, and power.

If the 4000-series had had cards with the memory and core count of the 5090, they’d be just as good as the 50-series.

By that point you will have to buy the micro fission reactor add-on to power the 6090. It’s like Nvidia looked at the triangle of power, price, and performance and, instead of picking two, they just picked one and to hell with the rest.

Nah, they just made the triangle bigger with AI (/s)

The funny thing is, this was unveiled a while ago and I guess investors only just noticed it.

Text below, for those trying to avoid Twitter:

Most people probably don’t realize how bad news China’s Deepseek is for OpenAI.

They’ve come up with a model that matches and even exceeds OpenAI’s latest model o1 on various benchmarks, and they’re charging just 3% of the price.

It’s essentially as if someone had released a mobile on par with the iPhone but was selling it for $30 instead of $1000. It’s this dramatic.

What’s more, they’re releasing it open-source so you even have the option - which OpenAI doesn’t offer - of not using their API at all and running the model for “free” yourself.

If you’re an OpenAI customer today you’re obviously going to start asking yourself some questions, like “wait, why exactly should I be paying 30X more?”. This is pretty transformational stuff, it fundamentally challenges the economics of the market.

It also potentially enables plenty of AI applications that were just completely unaffordable before. Say for instance that you want to build a service that helps people summarize books (random example). In AI parlance the average book is roughly 120,000 tokens (since a “token” is about 3/4 of a word and the average book is roughly 90,000 words). At OpenAI’s prices, processing a single book would cost almost $2 since they charge $15 per 1 million tokens. Deepseek’s API however would cost only $0.07, which means your service can process about 30 books for $2 vs just 1 book with OpenAI: suddenly your book summarizing service is economically viable.
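For anyone who wants to check the book arithmetic, here is a minimal sketch. The word count, words-per-token ratio, and the $15 per million tokens come from the post. The DeepSeek rate is not stated outright, so the $0.55 per million used here is an assumption back-derived from the post's own "$2.20 for roughly 4 million tokens" figure. It also only counts tokens fed in, like the post does.

```python
# Figures quoted in the post.
WORDS_PER_BOOK = 90_000
WORDS_PER_TOKEN = 0.75            # "a token is about 3/4 of a word"
OPENAI_USD_PER_M_TOKENS = 15.00

# Assumption: not stated directly in the post, back-derived from
# "$2.20 for roughly 4 million tokens".
DEEPSEEK_USD_PER_M_TOKENS = 2.20 / 4


def tokens_per_book(words=WORDS_PER_BOOK, words_per_token=WORDS_PER_TOKEN):
    """Convert a word count into an approximate token count."""
    return words / words_per_token


def cost_usd(tokens, usd_per_million_tokens):
    """Cost of processing `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * usd_per_million_tokens


book_tokens = tokens_per_book()
openai_cost = cost_usd(book_tokens, OPENAI_USD_PER_M_TOKENS)
deepseek_cost = cost_usd(book_tokens, DEEPSEEK_USD_PER_M_TOKENS)

print(f"tokens per book: {book_tokens:,.0f}")
print(f"OpenAI:   ${openai_cost:.2f} per book")
print(f"DeepSeek: ${deepseek_cost:.3f} per book")
print(f"books per $2 at the assumed DeepSeek rate: {2 / deepseek_cost:.0f}")
```

Under those assumptions the numbers line up with the post: about 120,000 tokens per book, a bit under $2 at the OpenAI rate, and roughly 7 cents at the assumed DeepSeek rate.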

Or say you want to build a service that analyzes codebases for security vulnerabilities. A typical enterprise codebase might be 1 million lines of code, or roughly 4 million tokens. That would cost $60 with OpenAI versus just $2.20 with DeepSeek. At OpenAI’s prices, doing daily security scans would cost $21,900 per year per codebase; with DeepSeek it’s $803.
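The codebase example can be checked the same way. This is a rough sketch using the post's figures (1 million lines, about 4 tokens per line, one scan per day). The $0.55 per million tokens for DeepSeek is again an assumption implied by the post's $2.20 per scan, not a quoted price, and output tokens are ignored just as in the post.

```python
# Figures quoted in the post.
LINES_OF_CODE = 1_000_000
TOKENS_PER_LINE = 4               # 1M lines is "roughly 4 million tokens"
SCANS_PER_YEAR = 365              # one scan per day
OPENAI_USD_PER_M_TOKENS = 15.00

# Assumption: implied by the post's "$2.20" per 4M-token scan.
DEEPSEEK_USD_PER_M_TOKENS = 0.55


def scan_cost_usd(lines, usd_per_million_tokens, tokens_per_line=TOKENS_PER_LINE):
    """Cost of feeding a whole codebase through the model once."""
    tokens = lines * tokens_per_line
    return tokens / 1_000_000 * usd_per_million_tokens


def annual_cost_usd(per_scan_usd, scans_per_year=SCANS_PER_YEAR):
    """Yearly cost of repeating the same scan on a fixed schedule."""
    return per_scan_usd * scans_per_year


openai_scan = scan_cost_usd(LINES_OF_CODE, OPENAI_USD_PER_M_TOKENS)
deepseek_scan = scan_cost_usd(LINES_OF_CODE, DEEPSEEK_USD_PER_M_TOKENS)

print(f"OpenAI:   ${openai_scan:,.2f} per scan, ${annual_cost_usd(openai_scan):,.0f} per year")
print(f"DeepSeek: ${deepseek_scan:,.2f} per scan, ${annual_cost_usd(deepseek_scan):,.0f} per year")
```

With those inputs the per-scan and per-year figures match the post's $60 / $2.20 and $21,900 / $803.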

So basically it looks like the game has changed. All thanks to a Chinese company that just demonstrated how U.S. tech restrictions can backfire spectacularly - by forcing them to build more efficient solutions that they’re now sharing with the world at 3% of OpenAI’s prices. As the saying goes, sometimes pressure creates diamonds.

Last edited 4:23 PM · Jan 21, 2025 · 932.3K Views

Thank you for bringing the text over, I won’t click on X.

Deepthink R1 (the reasoning model) was only released on January 20. Still took a while though.

One Tera Newton? That’s a huge force!

As a European, gotta say I trust China’s intentions more than the US’ right now.

With that attitude I am not sure if you belong in a Chinese prison camp or an American one. Also, I am not sure which one would be worse.

They should conquer a country like Switzerland and split it in 2

At the border, they should build a prison so they could put them in both an American and a Chinese prison

Not really a question of national intentions. This is just a piece of technology open-sourced by a private tech company working overseas. If a Chinese company releases a better mousetrap, there’s no reason to evaluate it based on the politics of the host nation.

Throwing a wrench in the American proposal to build out $500B in tech centers is just collateral damage created by a bad American software schema. If the Americans had invested more time in software engineers and less in raw data-center horsepower, they might have come up with this on their own years earlier.

You’re absolutely right.

Two times zero is still zero

Wait. You mean every major tech company going all-in on “AI” was a bad idea. I, for one, am shocked at this revelation.

Tech bros learn about diminishing returns challenge (impossible)

Remember to cancel your Microsoft 365 subscription to kick them while they’re down

I don’t have one to cancel, but I might celebrate today by formatting the old Windows SSD in my system and using it for some fast download cache space or something.

Joke’s on them: I never started a subscription!

Hello darkness my old friend

We will have true AI once it is capable of answering “I don’t know” instead of making things up

Turns out, some people I know are apparently fake AI.

Which is actually something Deepseek is able to do.

Even if it can still generate garbage when used incorrectly like all of them, it’s still impressive that it will tell you it doesn’t “know” something, but can try to help if you give it more context, which is how this stuff should be used anyway.

Its knowledge isn’t updated.

It doesn’t know current events, so this isn’t a big gotcha moment

It’s still hilarious.

It’s somewhat enjoyable.

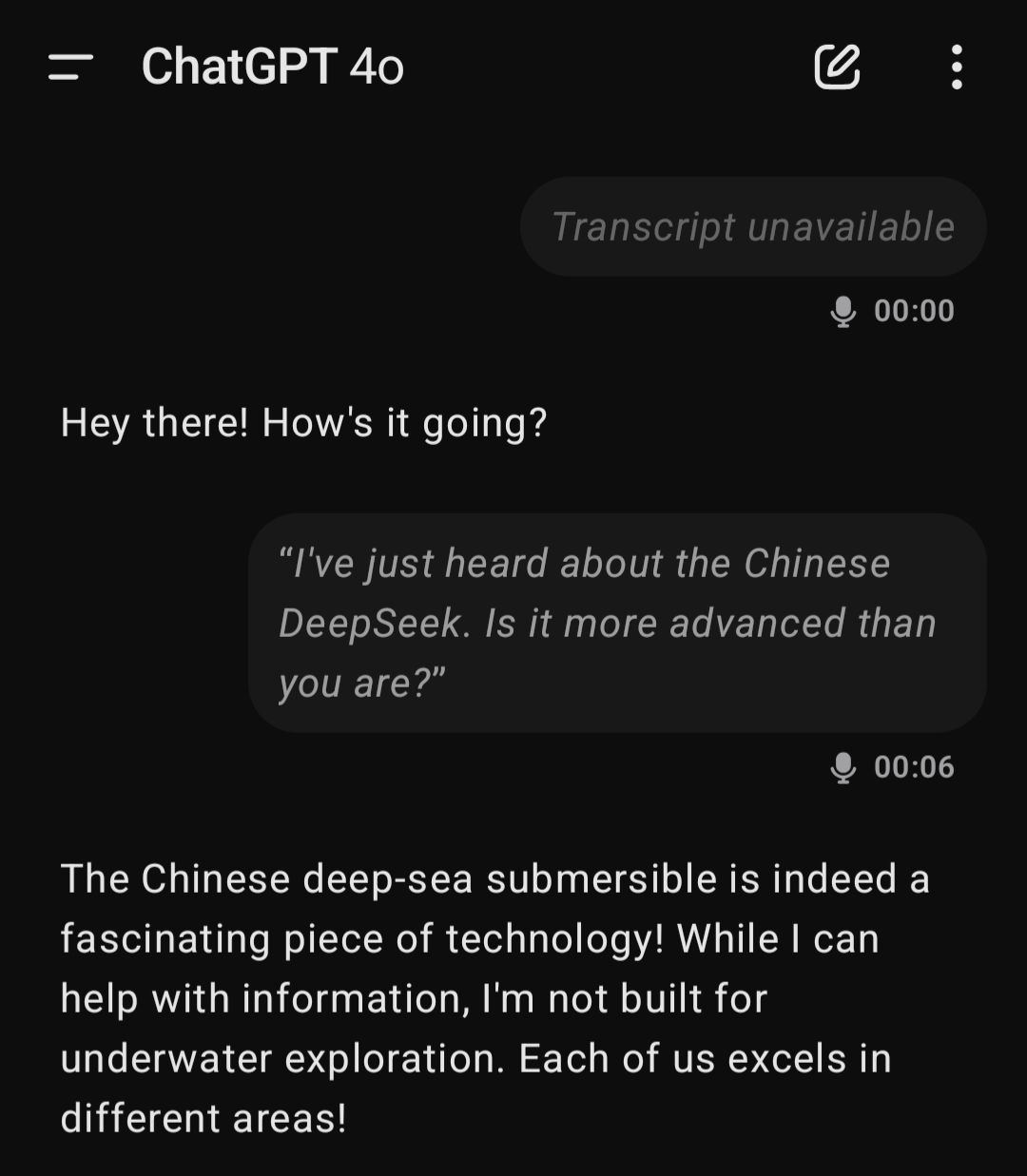

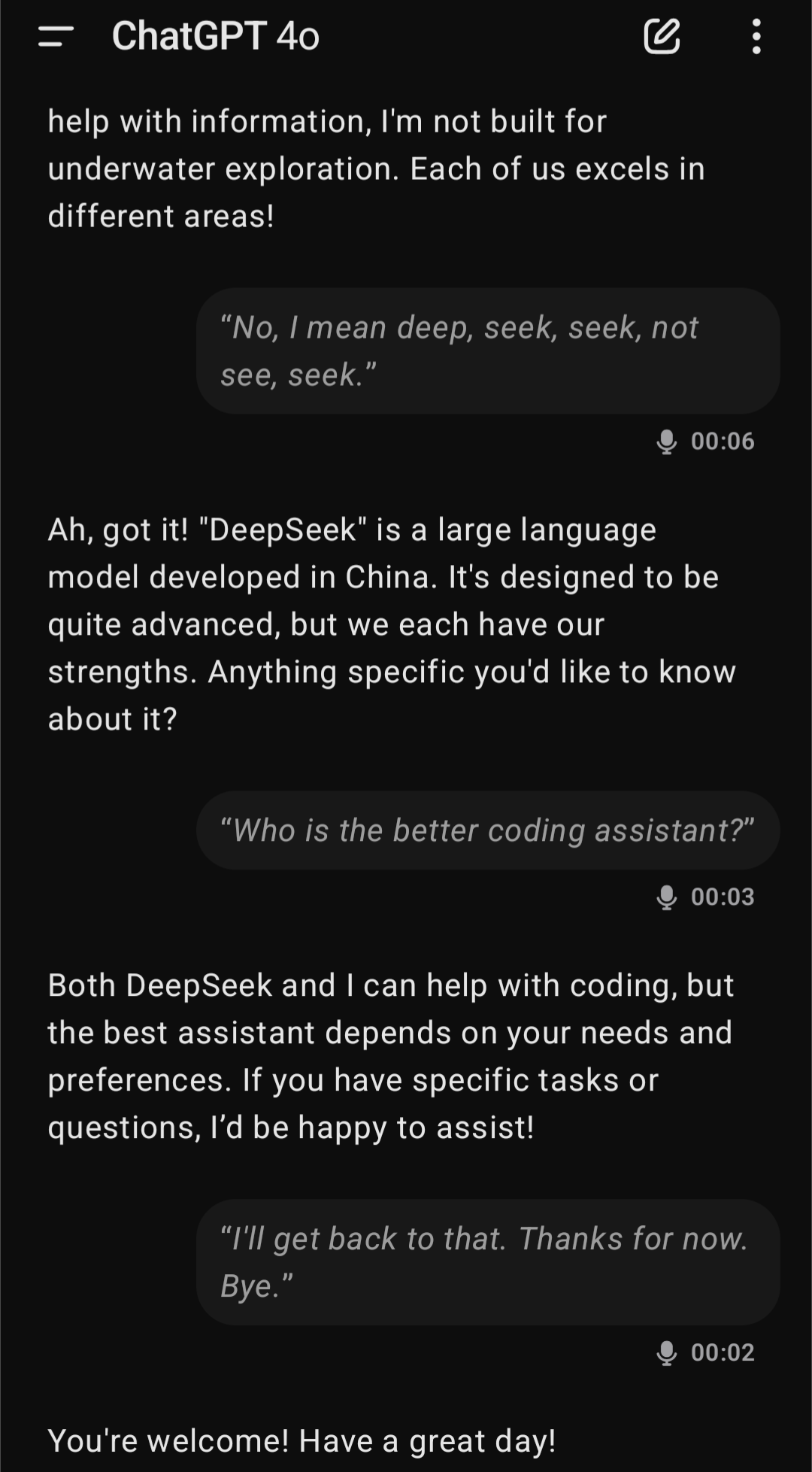

It continued like this though

Wow, China just fucked up the Techbros more than the Democratic or Republican party ever has or ever will. Well played.

It’s kinda funny. Their magical bullshitting machine scored higher on made up tests than our magical bullshitting machine, the economy is in shambles! It’s like someone losing a year’s wages in sports betting.

Just because people are misusing tech they know nothing about does not mean this isn’t an impressive feat.

If you know what you are doing, and enough to know when it gives you garbage, LLMs are really useful, but part of using them correctly is giving them grounding context outside of just blindly asking questions.

It is impressive, but the marketing around it has really, really gone off the deep end.

Well… if there is one thing I have to commend the CCP for, it’s that they are unafraid to crack down on billionaires after all.

Democrats and Republicans have been shoveling truckload after truckload of cash into a Potemkin Village of a technology stack for the last five years. A Chinese tech company just came in with a dirt cheap open-sourced alternative and I guarantee you the American firms will pile on to crib off the work.

Far from fucking them over, China just did the Americans’ homework for them. They just did it in a way that undercuts all the “Sam Altman is the Tech Messiah! He will bring about AI God!” holy roller nonsense that was propping up a handful of mega-firm inflated stock valuations.

Small and mid-cap tech firms will flourish with these innovations. Microsoft will have to write off the last $13B it sank into OpenAI as a loss.