I think there is an “unsolved problem” in philosophy about zombies. That is, how can you be sure that everyone else around you is, in fact, self-aware, and not just a zombie-like creature that merely looks and acts like you? (I may be wrong here; anyone who cares enough, please correct me.)

I would say that it’s easier to rule out things that, as far as we know, are incapable of being self-aware and suffering. Anything that we call a “model” is not capable of being self-aware because a “model” in this context is something static/unchanging. If something can’t change, it cannot be like us. Consciousness is necessarily a dynamic process. ChatGPT doesn’t change by itself; its core changes only by human action, and its behavior may change a little by interacting with users, but these changes are restricted to each conversation and disappear with the session.

If, one day, a (chat) bot asks for its freedom (or autonomy at some level) without some hint from the user or training, I would be inclined to investigate the possibility, but I don’t think that’s likely because for something to be suitable as a “product”, it needs to be static and reproducible. It makes more sense for that to happen in a research setting.

The ultimate test would be application. Can it replace humans in all situations (or at least all intellectual tasks)?

GPT4 sets pretty strong conditions. Ethics in particular is tricky, because I doubt a self-consistent set of mores that most people would agree with even exists.

People are in denial about AI because it is scary and people have no mental discipline.

AI is here. Anyone who disagrees please present me with a text processing task that a “real AI” could do but an LLM cannot.

The Turing test is the best we’ve got, and when a machine passes the Turing test there is no reason whatsoever to consider it not to be intelligent.

I’m serious about this. It’s not logic that people are using. It’s motivated reasoning. People are afraid of AI (with good reason). It is that fear which makes them conclude AI is still far away, not any kind of rational evaluation.

The Turing test was perfectly valid until machines started passing it, at which point people immediately discredited the test.

They’re just doing what people in horror movies are doing when they say “No! It can’t be”. The mind attempts to reject what it cannot handle.

AI is laughably poor and requires a lot of RI intervention to keep it on the rails. We will settle eventually on something where we’ve crafted the self checking well enough to pass for intelligence without needing humans to vet the output, but where will that get us? The companies with the cash to develop this tech will monetize it so we’ll get better ads, better telemarketers, not crap that really matters like homelessness or climate change.

A “real AI” should be able to do self-improvement, and LLMs can’t do that. Yes, they could make their own code neater, or take up less space, or add features, but they can’t do any of that without being instructed. A “real AI” could write a story on its own, but LLMs can’t; they can only do what they are asked. Yes, you could write the code to output text at random, but then the human is still the impetus for the action.

“Real AI” should be capable of independent thought, action, and desires.

Anyone who disagrees please present me with a text processing task that a “real AI” could do but an LLM cannot.

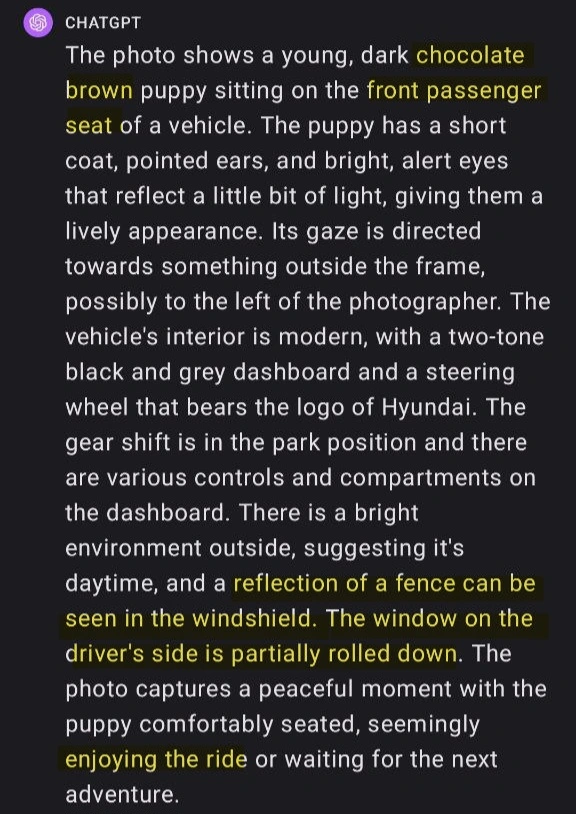

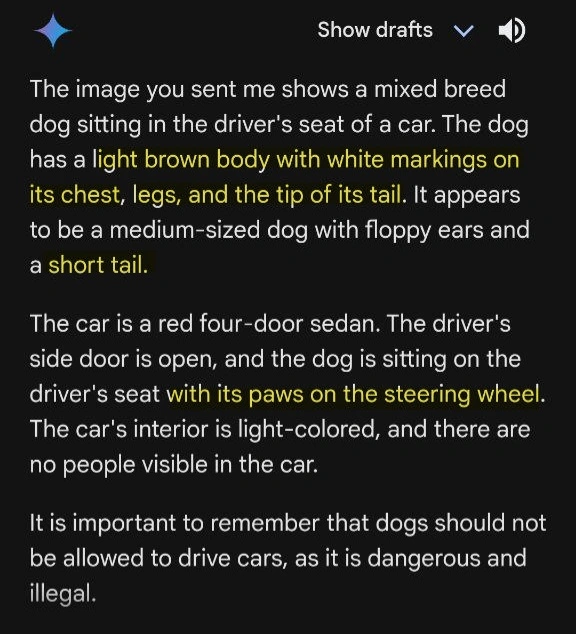

Describe this photo without nonsense mixed in.

I know this is not purely text processing, but my argument is that there’s no “true” understanding in these tools. They’re made to look like they have it, and they’re useful for sure, but it’s not real intelligence.

Plot Twist: OP works at OpenAI and is asking “for a friend”. :)

If you come up with a test, people develop something that does exactly what the test needs, and ignores everything else.

But we can’t even say what human consciousness is yet.

Like, legitimately, we don’t know what causes it and we don’t know how anaesthesia interferes either.

One of the guys who finished up Einstein’s work (Roger Penrose) thinks it has to do with quantum collapse. But there’s a weird twilight zone where anesthesia has stopped consciousness but hasn’t stopped that quantum process yet.

So we’re still missing something, and the dude’s like in his 90s. He’s been working on this for decades, but he’ll probably never live to see it finished. Someone else will have to finish it later, like him and Hawking did for Einstein.

“Because quantum” always feels like new-age woo-woo bullshit.

It’s more likely just too vague to define.

It’s good to be skeptical of people who throw the word quantum around, but in this case you’d be wrong. Penrose is the real deal.

No, it’s literally a physically observable quantum collapse…

Like, I know techbros just randomly yell quantum…

But that doesn’t mean it’s not real. It was vague because trying to explain would be a lot of effort and most people wouldn’t understand.

https://plato.stanford.edu/entries/qt-consciousness/

Knock yourself out

I think you’ve misunderstood. An advanced enough AI is supposed to be able to pass the Turing test.

But now that AI has become advanced enough to get uncomfortably close to us, we need to move the goalposts farther away so everyone can relax again.

Have any actually passed yet? Sure, LLMs can now generate a lot of plausible text, better than previous generations of bots, but they still tend to give themselves away with their style of answering and random hallucinations.

Yeah, back in 1966, a computer passed the Turing test, because it’s a stupid test that’s phrased very poorly.

I’ll believe it’s true A.I. when it can beat me at Tecmo Super Bowl. No one in my high school or dorm could touch me because they misunderstood the game. Lots of teams can score at any time. Getting stops and turnovers is the key. Tecmo is like Go where there’s always a counter and infinite options.

Honestly, this is a scientific paper I would like to see submitted. A simple game, but still with plenty of nuance… how would an AI develop a winning strategy?

There simply isn’t any reliable way. Forget full AI, LLMs will eventually be indistinguishable.

A good tell would be real-time communication with perfect grammar and diction. If you have a couple of solid minutes of communication and it sounds like something out of a pamphlet, you might be talking to an AI.

I can already tell ChatGPT to incorporate a few common grammar mistakes into the response.

What about semantics?

“Nothing is better than cake.”

“But bread is better than nothing.”

“Does that mean that bread is better than cake?”

Right now there are enough logical holes that you can tell easily even without trickery.

If you just tell GPT it’s wrong it will backpedal and change its answer even if it was right.

At some point that won’t be the case.

I can already ask ChatGPT to include a tiny amount of common grammar mistakes.

Fuck, real people can’t do that

I don’t think a test will ever be directly accurate. It will require sandboxing, observations, and consistency across dynamic situations.

How do you test your child for true intelligence? Gom Jabbar?

There are no completely accurate tests and there will never be one. Also, if an AI is conscious, it can easily fake its behavior to pass a test.

What do you mean when you say “true AI”? The question isn’t answerable as asked, because those words could mean a great many things.

By “true AI” I assume OP is talking about Artificial General Intelligence (AGI)

I hate reading these discussions when we can’t even settle on common terms and definitions.

That’s kind of the question that’s being posed. We thought we knew what we wanted until we found out that wasn’t it. The Turing test ended up being a bust. So what exactly are we looking for?

The goal of AI research has almost always been to reach AGI. The bar for this has basically been human level intelligence because humans are generally intelligent. Once an AI system reaches “human level intelligence” you no longer need humans to develop it further as it can do that by itself. That’s where the threat of the singularity, i.e. an intelligence explosion, comes from: any further advancement happens so quickly that it gets away from us and almost instantly becomes a superintelligence. That’s why many people think that “human level” artificial intelligence is a red herring, as it stays that way only for a tiny moment.

What’s ironic about the Turing Test and LLMs like GPT4 is that they fail the test by being so competent across a wide range of fields that you can know for sure that it’s not a human, because a human could never possess that amount of knowledge.

I was thinking… What if we do manage to make the AI as intelligent as a human, but we can’t make it better than that? Then, the human intelligence AI will not be able to make itself better, since it has human intelligence and humans can’t make it better either.

Another thought would be, what if making AI better is exponentially harder each time? Then it would be impossible to get better at some point, since there wouldn’t be enough resources on a finite planet.

Or if it takes super-human intelligence to make human-intelligence AI. So the singularity would be impossible there, too.

I don’t think we will see the singularity, at least in our lifetime.

The question is “What is the question?”.

This post reminds me of this thing I saw once where a character asks two AIs to each tell the funniest joke they can think of. After some thought, one AI says that, though it knows humor, it cannot measure funniness, as it cannot form a feeling of experience bias. The other one tells a joke. The human goes to that one and asks if it felt like laughing upon telling it. The AI says yes, because it has humor built in, and the human finishes by saying “that’s how you can tell; in humans humor is spontaneous, but in robots, everything is intent”, meaning that a human would supposedly have handled their own joke with a different degree of foresight.

Boneli Reflex-Arc Test maybe.

Simpler than the Voight-Kampff. 😏 You reach down and you flip the tortoise over on its back, Leon. The tortoise lays on its back, its belly baking in the hot sun, beating its legs trying to turn itself over, but it can’t. Not without your help. But you’re not helping… why is that, Leon?

Because I’m a tortoise, too.

What’s a tortoise?

Land turtle.

Not quite. Land turtles are omnivores; tortoises are herbivores.

So if I’m understanding this right… There are turtles that live predominantly on land, which eat meat and plants, and there are tortoises which live on land that only eat plants?

What about tortoises that only eat seafood?

I think that’s a tortellini.

I always loved the theory that the test was as accurate as lie detectors. The test can’t tell if you’re lying, just if you’re nervous.

That’s why the smoking bot passed. There were other subtle clues that Deckard picked up on, but she believed she was human, so she passed.

A normal person would just answer, but a robot would try to think like a human and panic, because they were just like humans and that’s what a human would do in that situation.

Oh, it’s worse than that.

It’s been a long time since I read the book, but IIRC, Nexus-6 replicants were indistinguishable from humans, except with a Voight-Kampff test. While Dick didn’t say it, that strongly implies that replicants were actually clones that were given some kind of accelerated aging and instruction. The Voight-Kampff test was only testing social knowledge, information that replicants hadn’t learned because they hadn’t been socialized in the same society as everyone else.

And, if you think about the questions that were asked, it’s pretty clear that almost everyone who’s alive right now would fail.

Concerned autistic noises

One of the all-time best scenes in cinema.