The triple whammy of semiconductor shortage, pandemic and cryptocunts has really fucked PC gaming for a generation. The price is way out of line with the capabilities compared to a PS5.

I’m still on a 1060 for my PC, and it’s only my GSync monitor that saves it. Variable refresh rate really is great for all PC games tbh. You don’t have to frig about with settings as much because Opening Bare Area runs at 60fps, but the later Hall of a Million Alpha Effects runs at 30. You just let it rip between 40 and 80, no tearing, and fairly even frame pacing. The old “is this game looking as good as it can on my hardware while still playing smoothly?” question goes away, because you just get extra frames instead, and just knock the whole thing down one notch when it gets too bad. I’m spending more time playing and less time tweaking and that can only be a good thing.

I’m just clutching my pre-covid, pre-shortage GTX 1080ti. Hoping it’ll keep powering through a little longer. Honestly, it’s an amazing card. If it ever dies on me or becomes too obsolete, I’ll frame it and hang it on my wall.

I just wish AMD cards were better at ray tracing and “work” than Nvidia cards. Otherwise I’d have already splurged on an AMD if I could.

GPU prices being affordable is definitely not a priority of AMD’s. They price everything to be barely competitive with the Nvidia equivalent. 10-15% cheaper for comparable raster performance but far worse RT performance and no DLSS.

Which is odd because back when AMD was in a similar performance deficit on the CPU front (Zen 1, Zen+, and Zen 2), AMD had absolutely no qualms or (public) reservations about pricing their CPUs where they needed to be. They were the value kings on that front, which is exactly what they needed to be at the time. They need that with GPUs and just refuse to go there. They follow Nvidia’s pricing lead.

Corporations are not our friends. 🤷‍♂️

something many people overlook is how intertwined nvidia, intel and amd are. not only does the personnel routinely switch between those companies but they also have the same top shareholders. there’s no natural competition between them. it’s like a choreographed light saber fight where all of them are swinging but none seem to have any intention to hit flesh. a show to make sure nobody says the m word.

…mayfabe?

They’re cycling out the old curse words. The Carlin ones are now fine. The new list is:

- Monopoly

- Union

- Rights

- Child labor

Put em all together and we’re getting “murc’d”

I agree, it’s just strange from a business perspective too. Obviously the people in charge of AMD feel that this is the correct course of action, but they’ve been losing ground for years and years in the GPU space. At least as an outside observer this approach is not serving them well for GPU. Pricing more aggressively today will hurt their margins temporarily but with such a mindshare dominated market they need to start to grow their marketshare early. They need people to use their shit and realize it’s fine. They did it with CPUs…

Say it loud and say it proud, corporations are no one’s friend!

100%. Outside of brand loyalty, I just simply don’t see any reason to buy AMD’s higher tier GPUs over Nvidia right now. And that’s coming from a long, long time AMD fan.

Sure, their raster performance is comparable at times, but almost never actually beats out similar tiers from Nvidia. And regardless, DLSS virtually nullifies that, especially since the vast majority of games for the last 4 years or so now support it. So I genuinely don’t understand AMD trying to price similarly to Nvidia. Their high end cards are inferior in almost every objective metric that matters to the majority of users, yet still ask for $1k for their flagship GPU.

Sorry for the tangent, I just wish AMD would focus on their core demographic of users. They have phenomenal CPUs and middling GPUs, so target your demographics accordingly, i.e. good value budget and mid-tier GPUs. They had that market segment on complete lockdown during the RX 580 era, I wish they’d return to that. Hell, they figured it out with their console APUs. PS5/XSX are crazy good value. Maybe their next generation will shift that way in their PC segment.

It’s especially egregious with high end GPUs. Anyone paying >$500 for a GPU is someone that wants to enable ray tracing, let alone at $1000. I don’t get what AMD is thinking at these price points.

FSR being an open feature is great in many ways but long-term its hardware agnostic approach is harming AMD. They need hardware accelerated upscaling like Nvidia and even Intel. Give it some stupid similar name (Enhanced FSR or whatever) and make it use the same software hooks so that both versions can run off the same game functions (similar to what Intel did with XeSS).

AMD still has better Linux support for now, which is about 90% of the reason I went with them.

If you’re running Linux there’s only one option

I have zen 2 and the apu is good enough for me, high end shit is always ridiculous.

AMD’s had some buggy drivers and misleading graphs, but they’re overall infinitely more consumer-friendly than Nvidia

It is the lesser of two evils imo. Not saying that AMD is any good, their alternatives are just that bad.

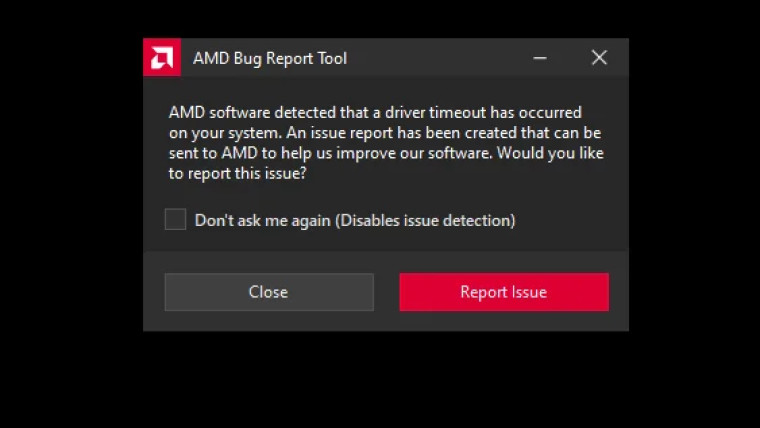

drivers have been solid for years now

Good one!

AMD is the only real option for those of us using Linux. Nvidia’s weirdnesses fill up support tickets on Linux forums so regularly it’s not even funny.

I’ve been using Linux on my desktop since 1995, have used a lot of nVidia cards and have yet to experience that weirdness you speak of.

I’ve been using Nvidia with Linux for a VERY long time. Currently I have computers running:

- GT 1030 - two older PCs

- GTX2060 Ti

- RTX 3050 Ti - laptop

They are all working fine with openSUSE Tumbleweed. I install openSUSE, add the Nvidia community repo (a couple of clicks), run updates once, and reboot. Everything just works after that. I can count maybe 3 times in the past 6 years that there was any issue at all.

Now Ubuntu and derivatives… I’ve had a LOT of issues and weirdness… drivers failing, doing weird things etc.

Am I having a stroke, or does that actually say “here’s the our source code”?

what if this is just a psychology test and we are expected to not notice and discuss amd or nvidia

Oops

we’ll let you peak 🗻

youre crazy

*your crazy

As an AMD fanboy, I approve of this.

And now, for a serious note: been running linux daily for almost 20 years and AMD machines are, per my personal experience, always smoother to install, run and maintain.

I’ve been intel w/ nvidia since 2007 on Linux. Recent trends have me thinking AMD is the way to go for my next one though. I think I’ve got so used to the rough edges of Nvidia that they stopped bothering me.

As someone who has been ignoring AMD for most of this time, (my last AMD product was something in the Athlon XP line), can I do Intel CPU w/ AMD discrete GPU?

Can I get back to you, say, in three weeks?

I’m about to put together a machine based on a AB350 chipset, with a Ryzen 5 (g series, for graphics from the start) and after that I intend to install on it a budget RX580.

If the thing doesn’t ignite or explode, I’ll gladly share the end result.

No rush whatsoever, but I’d be thrilled to hear about your results when you are done.

Yeah, this is what my wife was doing. I’m also doing the reverse: AMD CPU, NVidia GPU. I considered AMD but went NVidia mostly for the PPW on an undervolted 4070. It results in a cool, quiet, low-wattage machine that can handle anything that matters to me, which AMD GPUs still can’t match this gen even with the upcoming 7800XT they’re trying to compare against the 4070. I’d wait for some PPW analysis before making a choice depending on your needs. There’s way more to the analysis than GPU source code or even raw performance that is often overlooked.

Oh, and don’t sleep on AMD. Though I don’t feel like the AM5 platform is fully baked, Ryzen architecture is rock-solid and I fully recommend using it if your history with Athlon is what’s keeping you away. I actively avoided them for the same reason until a friend convinced me otherwise, and I’m so glad I did.

Thanks, will take a hard look when it’s time to buy again. I forgot to specify that I was explicitly discussing Linux usage also - assume same answer?

Can’t speak to that, unfortunately. But I assume there would be no issues. The devices themselves are system agnostic; Windows isn’t doing anything special to make them play nice with each other.

Yeah, AMD GPUs work great across the board no matter the CPU.

I should have specified “in Linux” more explicitly - same answer? :-)

Hey there, fellow 20-year Linux desktop fanboy! Exactly the same here.

I am not alone!!! Yes!

I just bought my first Nvidia card since the TNT2. Up to today I always looked for the most FPS for the money.

This time my focus was on energy efficiency, and the AMD cards suck at the moment. 4070 about 200w, 6800 about 300w. AMD really has to fix that.

Regarding DLSS: I activated it in Control, and it looks… off? Edges seem unsharp, not all the time, but often, sometimes only for a second, sometimes longer. I believe it is the only game I have that has support for it, but I’m not impressed.

At OP: Brand loyalty is the worst. Neither Nvidia nor AMD like you. Get the best value for your money.

Btw, Nvidia needed an account to let me use their driver. Holy shit, that’s fucked up!

There is a way around the account requirement. I uninstalled GeForce experience forever ago

Wait what?? Thank you, I will look into it, I don’t need that crap!!

When you install the drivers there is a checkbox for geforce experience. I think you need to do “custom installation” or advanced or whatever they call it instead of just clicking the install button they show.

Aaaaaaand… it’s gone! Thanks!!

You can get drivers directly from their site without an account as well.

> 4070 about 200w, 6800 about 300w. AMD really has to fix that.

But if you compare cards from the same generation, like the 3070 and 6800, they’re much closer. Nvidia still has the edge, but the 3070 TGP is 220W vs the 6800 at 250W.
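For what it's worth, the gap is easy to put a number on. Here's a quick Python sketch using only the wattages quoted in this thread (forum figures, not measurements, and `extra_power_pct` is just a name I made up):

```python
def extra_power_pct(baseline_w: float, other_w: float) -> float:
    """Return how much more power `other_w` is than `baseline_w`, in percent."""
    return (other_w - baseline_w) / baseline_w * 100.0


# Same-generation comparison quoted above: 3070 at 220 W vs 6800 at 250 W.
same_gen = extra_power_pct(220, 250)

# Cross-generation comparison from the earlier comment: 4070 at ~200 W vs 6800 at ~300 W.
cross_gen = extra_power_pct(200, 300)

print(f"6800 vs 3070: {same_gen:.1f}% more power")
print(f"6800 vs 4070: {cross_gen:.1f}% more power")
```

With those numbers the same-gen gap works out to roughly 14%, against 50% for the cross-gen one. That's board power only and says nothing about frames per watt.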

According to TomsHardware, the RX 6800 is currently the most efficient.

Maybe, but it draws 280 watts instead of 200. That’s just too much, at least for me.

You don’t necessarily need an account to use the Nvidia drivers, just if you want automatic updates through GeForce Experience. Not saying that’s any better, in fact it’s almost as shitty, just wanted to clarify.

I just used a junk email to make an account for the auto updates.

Keen to see how FSR3 ends up looking, if it comes within decent parity to DLSS3 it’s going to be amazing, considering it’s hardware agnostic so theoretically console devs can use it to boost framerates.

AMD confirmed it works on console. First impressions from Digital Foundry etc. said it exceeded expectations, however they weren’t allowed to play it. Hopefully lag isn’t terrible.

We’ve come a long, long way, baby.

AMD’s Epyc server CPUs would be like 64 Machamp. Mf is huge and requires a hell of a cooler. I see them at the datacenter I work at, and when I opened the server up I thought I was looking at a turbocharged car engine or something.

That’s very true, but perhaps I should have specified this is a very, very old meme (thus why we have come a long way). Probably 10-15 years old? Back when AMD really was struggling with performance issues, before they came back with the Ryzen series. Epyc servers are only like six years old, IIRC.

I’m confused, was there a time when i3 cores were better than i5?

It used to be for a while that i3 was dual core with hyper threading, where the i5 was quad core with no hyper threading, and the i7 was quad core with HT.
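To spell that out as arithmetic, here's a rough Python sketch of the lineup as described above. The core counts and HT flags are just the ones from this comment, not a full spec sheet, and `CpuTier` is a made-up name for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuTier:
    name: str
    cores: int
    hyper_threading: bool

    @property
    def threads(self) -> int:
        # Hyper-threading exposes two hardware threads per physical core.
        return self.cores * (2 if self.hyper_threading else 1)


# Tiers as described in the comment above (assumed, simplified).
TIERS = [
    CpuTier("i3", cores=2, hyper_threading=True),
    CpuTier("i5", cores=4, hyper_threading=False),
    CpuTier("i7", cores=4, hyper_threading=True),
]

for tier in TIERS:
    print(f"{tier.name}: {tier.cores} cores, {tier.threads} threads")
```

So the i3 and i5 of that era both showed 4 threads, but the i5's were all physical cores, which is why it still sat above the i3.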

Oooh I see, thanks

Corporations aren’t your friend.

My rig is full AMD (5800x/5700xt), but that’s purely because they happened to be the better value at the time. The second they get a lead in the consumer GPU market (which they likely will since nvidia simply doesn’t care about it vs the ML market now) prices are going to rise again.

And don’t pretend that these prices are anything resembling affordable. That would be when you could get a legitimately mid-range card for ~$150 (rx580).

> Corporations aren’t your friend.

Correct. But AMD is doing things that benefit FOSS and Linux, whereas nVidia is a menace. Intel is also doing pretty decent, they just need to catch up in terms of driver features.

Honey their x80 equivalent cards are over double what they used to be. Stop praising them for doing the bare fucking minimum.

Man, I could use another $200 MSRP mid-range card. I’m also running RX5700XT (for $430!) and it’s probably going to be the first card I will use until it dies, unless there’s a reasonably priced mid-range coming out soon.

I have that card too but i think it was closer to 500 when i bought it. I felt like i got a terrible price but it was better than what folks after me had to deal with. It’s still a great card and i hope it outlasts this crazy price gouging.

Mine was literally the last in stock before prices went wild when covid started. I was using rx480 and didn’t really need to upgrade until Gamers Nexus published a video about GPU shortage.

My hope right now is for AMD to keep improving on FSR so this card can stay viable for more years.

Yep. I’m running a Ryzen CPU for the first time as of late last year because the 5950X was on sale and Intel had no competing options anywhere near the same price. It was 16c/32t AMD for like $220 or the same core and thread count for $560 from Intel.

That is what you have to do if you’re behind the competition. Don’t think they’ll keep this up for long if they happen to be the industry leader.

Always back the underdoge

Have I just had bad luck with my AMD products?

I’ve had four Nvidia GPU/Intel CPU computers with no issues.

I’ve had three AMD GPU/AMD CPU computers and they all have been loud and hot and slightly unstable. A bit cheaper sure, but I’d rather have a silent and stable experience.

This has made me see amd as the inferior low-budget crap. But maybe I have just bought from the wrong manufacturer or something.

I can’t speak of older stuff, but my Ryzen 5 5600x and RX 6800 have been great. I’ve had this pc for a year now, and only have had the GPU drivers crash twice. That is about on par with my older gtx 1070

Maybe, because cpu wise amd should be doing better than intel on heat and power consumption. Loud would have to do with the cooler you choose and wouldn’t be a function of the cpu itself. Aftermarket coolers are often better than stock and not that pricey, but will want to look into reviews for a quiet one. Amd had been cleaning intel’s clock the past few generations in cpu performance. Intel has finally caught up again and is slightly ahead in power this gen, though amd still winning a lot in efficiency and power consumption/heat and still has the best gaming cpu. Good summary here.

https://www.tomshardware.com/features/amd-vs-intel-cpus

In terms of gpu that’s gonna vary widely depending on what specific gpu and what configuration of that gpu you’re buying. Before buying I would look into specific reviews of that manufacturer if you can and not just the stock gpu itself, because every one is going to have a different configuration and fan/cooler setup for the gpu. Unfortunately gpus from both amd and Nvidia are becoming more and more power hungry giant heat generating monsters over time.

You have just been unlucky. I’ve never had any such issue. Were you using stock CPU cooler? I’ll admit the CPU cooler that comes in the box with AMD is atrocious.

I could not even be in the same room when using the stock cooler on my 3900x that I have now.

Had to use a radiator with Silent Wings fans to get it acceptable.

So this was also several years ago that you had this problem? I don’t think you will have those problems with a modern GPU.

2020 I bought my new pc.

He’s talking about noise, not thermals.

Personally my experiences rank (best to worst)

- AMD CPU Nvidia GPU

- Intel CPU Nvidia GPU

- AMD CPU AMD GPU

- Intel CPU AMD GPU

This is the general trend in my roughly two decades of having my own PCs, so your mileage may well vary, especially since some series of both CPUs and GPUs were just better/more compatible with each other than now or the other way around.

In case anyone’s curious, my current combo is Ryzen 7 3800X and RTX 3060.

You have almost the same setup as I do right now.

3900x and a 3080.

Took me four cpu fan switches until I could stand being in the same room. Stock fan, sounded like a drying cabinet. be quiet!, loud. Noctua, less loud but still loud. I’m using a radiator now with Silent Wings fans and it’s still slightly too loud for my taste (and louder than any Intel I’ve ever had).

Temps seem to be in normal range though.

I went with idiotproof watercooling for the CPU and a mid-range quiet-type cabinet and it’s whisper-quiet and well within optimal temperature range even at high load.

Watercooling: Shark Gaming BloodFreezer 120 RGB

Cabinet: Cooler Master Silencio S600

Similar boat, here. AMD/ATI GPUs have never been stable or even good in my experience. Same with Intel CPUs.

So, it’s AMD CPU and NVidia GPU for me forever, moving forward, unless something catastrophic happens.

I’ve only ever used amd gpus and intel cpus, and the only hardware issue I’ve had is one gigabyte card having a firmware bug that killed it. amd always worked great on windows for me, but on linux they suffer from crashing quite often.

I’m still using my EVGA GeForce 1070. When it’s time to upgrade, I’m going with AMD.

Only reason I don’t is because:

- nvidia just works better on linux. Well… I heard that’s changed so this may no longer be relevant

- I don’t think AMD GPUs work well compared to nVidia with Davinci Resolve

- DLSS/Ray Tracing. Even though I never use ray tracing because even the first card with it couldn’t handle it 😅

> nvidia just works better on linux. Well… I heard that’s changed so this may no longer be relevant

This isn’t and has never been the case. Nvidia and AMD are comparable performance-wise on Linux these days, but since the Nvidia drivers are proprietary, they’re automatically harder to deal with than the open-source AMD drivers. For that reason alone, AMD is easier to use with Linux out of the box, because the Linux kernel has AMD drivers built in. You still have to install userspace drivers in either case, but the open-source AMD userspace drivers have outperformed Nvidia’s proprietary drivers for a long time. It’s only been within the last couple years that Nvidia’s proprietary drivers have reached parity with AMD’s open-source ones.

- I switched to AMD because nvidia worked like dogshit on Linux. Especially when I needed Wayland.

- I really dunno

- FSR is the replacement. But RTX would be slower on AMD but still good enough for some people.

Ray tracing is about to get WAY better with DLSS 3.5…damn it AMD, why can’t you guys have borderline useless, but also really cool features :C

I am super happy with my 7950X3D. However, their GPU drivers still need some work for the 7900XTX.

I used to have lots of driver crashing and weirdness on my RX 580, but I’ve had mostly smooth sailing with my 6600 XT.

To be honest, I only get the driver crash at the absolute worst times now. After I did the switch to AMD from Intel and Nvidia, I did do a fresh windows install and have only had to reinstall the AMD graphics drivers about 4 times in the last couple of months. (While true, the last paragraph is not as bad as it sounds. Annoying, yes. End of the world, no.)

There is a pattern to the madness though. If I go from gaming to other GPU intensive apps used across different screens, it’s probably going to hang the driver. Not fatally, but I reboot anyway when it happens.

AMD is on the right track though. I think I have been through three different GPU drivers versions since I built the system and it is slowly getting better. I get a driver crash about once a week instead of once a day now.

It’s possible your gpu voltages are too high, aka unstable, even at stock. I was having similar problems with the 6800xt, although they were rare. Undervolted it with MorePowerTool, and haven’t had any issues since.

I have been thinking about that, actually. Adrenalin does have an undervolt option, so I’ll give that a go first. If that doesn’t work, I’ll absolutely try MorePowerTool.

TBH, higher voltages and clocks with the 7900XTX are only good for benchmarks and real world performance gains are not that noticeable. (I could only get between 100-200 point gains on Kombustor) Undervolting is probably going to be good for the longevity of the card anyway.

I’ve never had amd drivers crash during normal usage, 6700xt water block. Microsoft sleep mode wrecks my pc and makes it instantly crash though.

Sleep mode is rough, for sure. It’s also one of the reasons why I did a completely fresh installation of Windows. (Sleep mode was suicide.) Also, I had heard an obscure rumor that AMD chipset drivers could be picky for old windows installations. (Like, not enabling the 3D cache on the CPU kind of picky.)

But yeah, you aren’t alone with the sleep mode woes.

Four times in 2 months? Hangs every time when switching from gaming to other GPU centric apps? Jeezuz, how are people finding that acceptable? You’re paying premium money for these products, demand better from these fucks. And the comment below you isn’t any better, crashing any time when waking from sleep mode is craziness.

Stop making excuses for AMD. They’re just another soulless corporation like any other, including Nvidia’s greedy asses.

Yeah, the GPU drivers haven’t been stable. Hell, one time they just stopped working completely and failed to recognize the card. Wut?

I mainly bounce between Diablo IV, War Thunder, Fusion360, PrusaSlicer and sometimes Blender. It doesn’t always hang, but when it does, it’s because I have been moving the apps back and forth between monitors. Multi-monitor support is buggy and that is absolutely a combination of the GPU drivers and the apps.

Yeah, I paid some coin, that is for sure. It is frustrating in that regard but I knew what I was getting into with AMD drivers. The first few generations of drivers are almost always garbage with new cards and they are showing improvements over the last few iterations of drivers.

Also, yes. I am tempted to give my 7900XTX to my daughter when the NVIDIA 5000 series drops. For now, I am just tolerating the issues. (I rarely had an issue with my 3070.)

No excuses here! The CPU is gold but the GPU drivers are shite. I am an extremely patient person though, so that helps.

I commend you on your zen master level of patience, haha. I’m only patient up to a degree and then it all goes out the window, heh.

I’m guessing you have the hotkey combination for rebooting the graphics drivers without having to reboot your PC? When I had a 5700 XT, that hotkey combo was a lifesaver (drivers on that would constantly hang for me as well).

I’m fairly sure the Windows drivers are still closed source and this is referring to the situation on Linux.

Some of the points in the meme do stretch across OS’s.

I switched from windows to Linux with my 7900XT and went from some GPU crashes to none

My problem when buying my last GPU is that AMD’s answer to CUDA, ROCm, was just miles behind and not really supported on their consumer GPUs. From what I see now that has changed for the better, but it’s still hard to trust when CUDA is so dominant and mature. I don’t want to reward NVIDIA, but I want to use my GPU for some deep learning projects too and don’t really have a choice at the moment.

I’ve become more and more convinced that considerations like yours, which I do not understand since I don’t rely on GPUs professionally, have been the main driver of Nvidia’s market share. It makes sense.

The online gamer talk is that people just buy Nvidia for no good reason, it’s just internet guys refusing to do any real research because they only want a reason to stroke their own egos. This gamer-based GPU market is a loud minority whose video games don’t seem to rely too heavily on any card features for decent performance, or especially compatibility, with what they’re doing. Thus, the constant idea that people “buy Nvidia for no good reason except marketing”.

But if AMD cards can’t really handle things like machine learning, then obviously that is a HUGE deficiency. The public probably isn’t certain of its needs when it spends $400 on a graphics card, it just notices that serious users choose Nvidia for some reason. The public buys Nvidia, just in case. Maybe they want to do something they haven’t thought of yet. I guess they’re right. The card also plays games pretty well, if that’s all they ever do.

If you KNOW for certain that you just want to play games, then yeah, the AMD card offers a lot of bang for your buck. People aren’t that certain when they assemble a system, though, or when they buy a pre-built. I would venture that the average shopper at least entertains the idea that they might do some light video editing, the use case feels inevitable for the modern PC owner. So already they’re worrying about maybe some sort of compatibility issue with software they haven’t bought, yet. I’ve heard a lot of stories like yours, and so have they. I’ve never heard the reverse. I’ve never heard somebody say they’d like to try Nvidia but they need AMD. Never. So everyone tends to buy Nvidia.

The people dropping the ball are the reviewers, who should be putting a LOT more emphasis on use cases like yours. People are putting a lot of money into labs for exhaustive testing of cooling fans for fuck’s sake, but just running the same old gaming benchmarks like that’s the only thing anyone will ever do with the most expensive component in the modern PC.

I’ve also heard of some software that just does not work without CUDA. Those differences between cards should be tested and the results made public. The hardware journalism scene needs to stop focusing so hard on damned video games and start focusing on all the software where Nvidia vs AMD really does make a difference, maybe it would force AMD to step up its game. At the very least, the gamebros would stop acting like people buy Nvidia cards for no reason except some sort of weird flex.

No, dummy, AMD can’t run a lot of important shit that you don’t care about. There’s more to this than the FPS count on Shadow of the Tomb Raider.

Well the counterpoint is that NVIDIA’s Linux drivers are famously garbage, which also pisses off professionals. From what I see from AMD now with ROCm, it seems like they’ve gone the right way. Maybe they can convince me next time I’m on the lookout for a GPU.

But overall you’re right yeah. My feeling is that AMD is competitive with NVIDIA regarding price/performance, but NVIDIA has broader feature support. This is both in games and in professional use cases. I do feel like AMD has been steadily improving over the past few years though. In the gaming world FSR seems almost as ubiquitous (or maybe even more) as DLSS, and ROCm support seems to have grown rapidly as well. Hopefully they keep going, so I’ll have a choice for my next GPU.

It’s a shame there’s not really an equivalent comparison to the CUDA cores on AMD cards, being able to offload rendering to the GPU and getting instant feedback is so important when sculpting (without having to fall back to using eevee)