If you own an Intel motherboard with a Z170 or Z270 chipset, you might believe that it only supports CPUs up to Intel’s 7th generation, known as Kaby Lake. Even the CPU socket’s pinout …


Soviet Computer Technology: Little Prospect for Catching Up

We believe that there are many reasons why the Soviets trail the United States in computer technology:

The Soviets’ centrally-planned economy does not permit adequate flexibility to design or manufacturing changes frequently encountered in computer production; this situation has often resulted in a shortage of critical components — especially for new products.


ANTI UPGRADE?? WHAT THE FUCK

No, “user security”.

They’ve been pulling this shit since the early days. Similar tricks were used in the 486 days to swap out chips, and again in the Celeron days. I think they switched to the slot style intentionally, at least to a point, to keep selling chips lol

IIRC, the slot CPU thing was because they wanted to get the cache closer to the processor, but hadn’t integrated it on-die yet. AMD did the same thing with the original Athlon.

On a related note, Intel’s anticompetitive and anti-consumer tactics are why I’ve been buying AMD since the K6-2.

They had already brought the L2 into the CPU package before that, with the Pentium Pro on Socket 8, though it sat on its own die next to the core rather than on the core die itself. IIRC the problem was that yields on those Pentium Pros were exceptionally low, and it was specifically the cache failing. So every chip with a bad cache had to be discarded or binned as a lower-spec part. The slot and SECC form factor let them use separate silicon on a larger node, keeping the cache on the cartridge (the SECC board) instead of inside the CPU package.
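A rough way to see why separate, individually tested cache silicon helps is the textbook Poisson yield model, where the chance that a piece of silicon has zero defects is Y = exp(-D·A) for defect density D and area A. This is only an illustration under that simplified model: the defect density and die areas below are hypothetical, not real Pentium Pro figures, and `silicon_per_good_part` is a made-up helper. It compares the silicon burned per working part when core and cache must both be good at once (shared die, or bonded together before they can be tested) against testing each piece on its own.

```python
import math
from typing import Sequence


def poisson_yield(defects_per_cm2: float, area_cm2: float) -> float:
    """Probability that a piece of silicon has zero defects (simple Poisson model)."""
    return math.exp(-defects_per_cm2 * area_cm2)


def silicon_per_good_part(defects_per_cm2: float,
                          areas_cm2: Sequence[float],
                          tested_separately: bool) -> float:
    """Average silicon area (cm^2) fabricated per working core+cache part.

    tested_separately=False: every piece must be defect-free at the same time,
    so one bad piece scraps the whole part (shared die, or pieces bonded
    together before they can be tested).
    tested_separately=True: each piece is tested on its own, so a bad cache
    only wastes the cache silicon, not the core.
    """
    if tested_separately:
        return sum(a / poisson_yield(defects_per_cm2, a) for a in areas_cm2)
    total = sum(areas_cm2)
    return total / poisson_yield(defects_per_cm2, total)


# Hypothetical numbers for illustration only; not real Pentium Pro data.
DEFECTS = 0.5      # defects per cm^2
CORE_AREA = 2.0    # cm^2
CACHE_AREA = 2.0   # cm^2

combined = silicon_per_good_part(DEFECTS, [CORE_AREA, CACHE_AREA], tested_separately=False)
separate = silicon_per_good_part(DEFECTS, [CORE_AREA, CACHE_AREA], tested_separately=True)
print(f"one unit: {combined:.1f} cm^2 per good part")
print(f"separate: {separate:.1f} cm^2 per good part")
```

With these made-up numbers it prints about 29.6 cm² versus 10.9 cm² per good part. The model ignores packaging yield, binning of partly working parts, and the cost of the extra board, so it only shows the direction of the effect, not real costs.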

AMD followed suit for the memory bandwidth benefits, coming from the K6-2 architecture. Intel had no reason to do so.

me when capitalism

That’s why we are in dire need of open-source hardware.

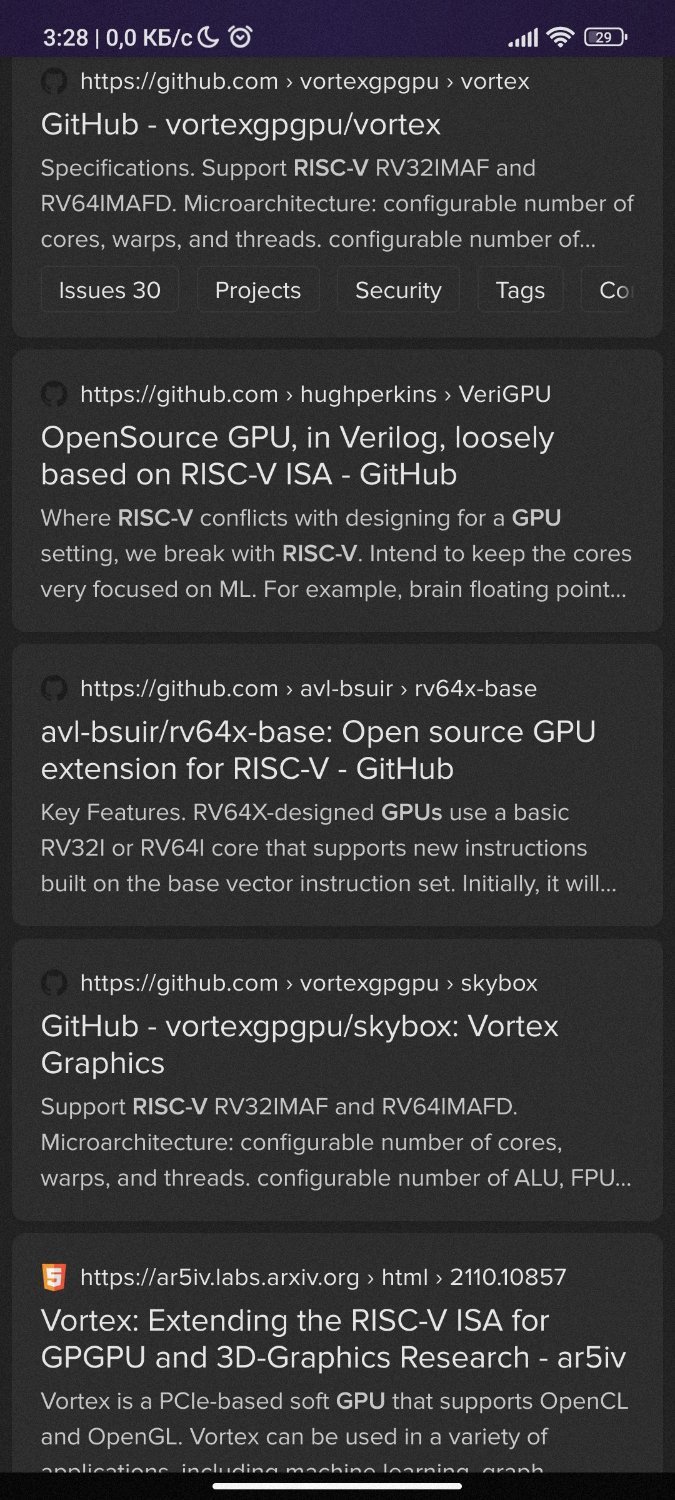

We have open-source designs (RISC-V, and even some GPU designs), but we don’t have open-source manufacturing capacity yet.

Are there any projects to develop that capability that you know of?

No, not yet. That’s the most I could find, but it’s not about the machines.

I dream of a world where the process gets as cheap as PCB manufacturing, where you can just submit the design you want and they fab it for you.

With more players entering the game because of sanctions, I hope we are now on that path.

Yes, I hope so too. For now, semiconductor lithography at home is impossible because of how big and complex these machines are, so I share your opinion.

https://www.cia.gov/readingroom/docs/DOC_0000498114.pdf

Thanks for the link to the unbiased study by… the CIA? Huh. Yeah I trust them.

The paper was from 1985. Was the CIA correct?

Marginally. The paper analyzes the capabilities as they existed in the 1980s, but doesn’t draw strong conclusions as to why that may be. It does demonstrate how reliance on central planning results in inadequacies when said central planning is not operating well, though.

The paper doesn’t really mention it but the central planning of the USSR was actively reeling from Brezhnev dying, Andropov dying, and Chernenko either dying or about to die at the time the CIA thing was written. So yeah, correct is an accurate if imprecise way to put it.

If your only response to criticism of capitalism is ((communism)), you may just be a cog in the corporate propaganda machine.

I mean they went with a literal cia link.

It’s been at least since the “big iron” days.

Technician comes out to upgrade your mainframe and it consists of installing a jumper to enable the extra features. For only a few million dollars.

Intel is well known for requiring a new board for each new CPU generation, even when the socket stays the same. AMD, on the other hand, is known for pushing a socket to its physical limits before breaking compatibility.

But why? Did Intel make a deal with the board manufacturers? Is this a tradition from the days when they built boards themselves?

I thought they just didn’t care and wanted as few restrictions on their chip design as possible, but if this actually works without drawbacks, that theory is out the window.

Intel also sells the chipset and the license to the chipset software; the more boards get sold, the more money they make (as well as their motherboard partners, who also get to sell more, which encourages more manufacturers to make Intel boards and not AMD)

They used to dominate the consumer market prior to Ryzen so might have something to do with it but I got no evidence lol

Just another instance of common anti-consumer behavior from multi-billion-dollar companies who have no respect for the customers that line their pockets.

guess who sells the chipsets to the motherboard manufacturers

There are many motherboard manufacturers but only 2 CPU manufacturers (for desktop PCs). Board makers don’t “make deals” so much as have the terms dictated to them. Even graphics card manufacturers made them their bitch back when multi-GPU was a thing: it was the board makers who had to sell the GPU makers’ CrossFire/SLI technology on their motherboards.

But otherwise upgrade parts would be too affordable!

the horror