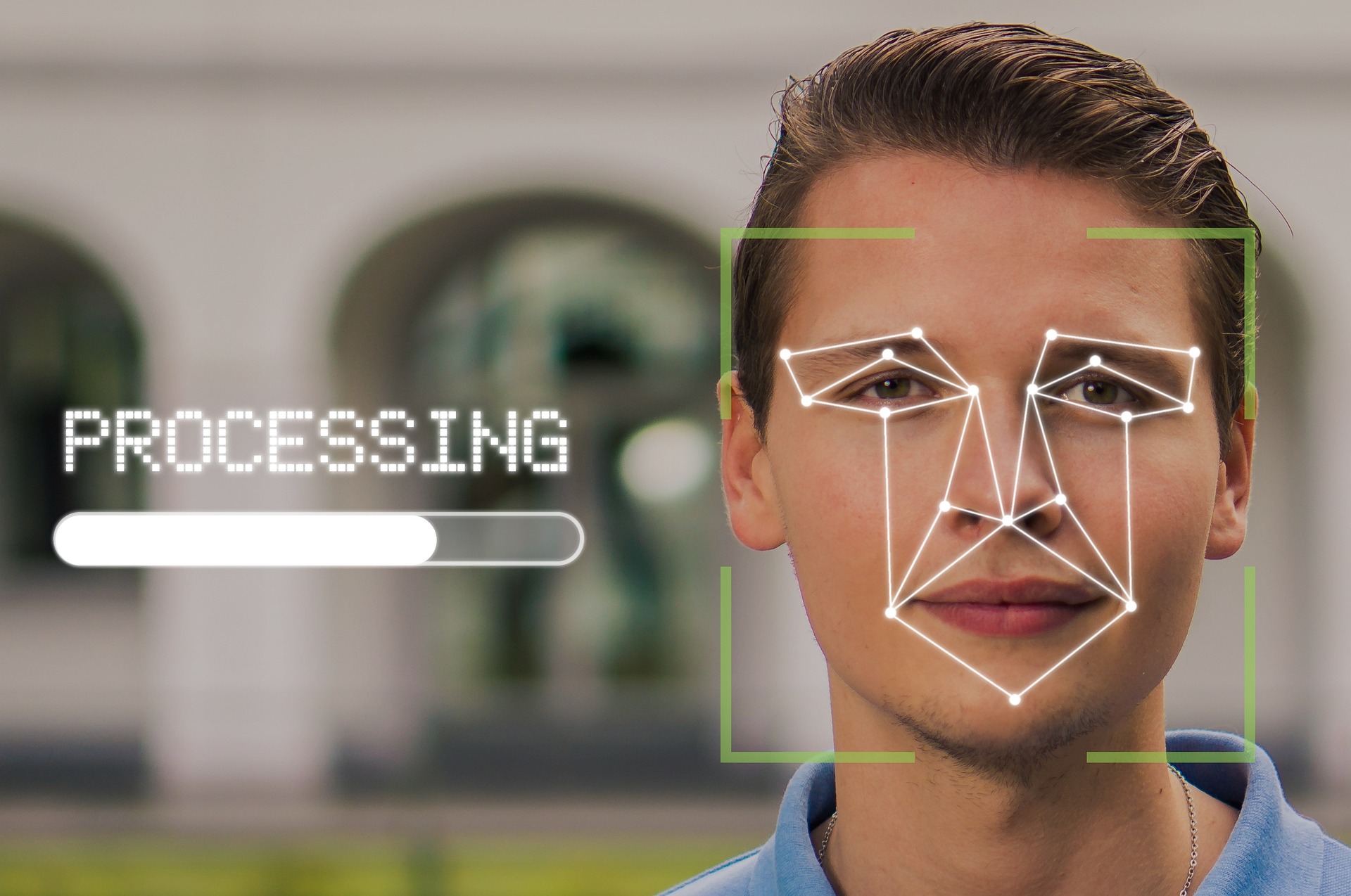

A big biometric security company in the UK, Facewatch, is in hot water after its facial recognition system caused a major snafu: the system wrongly identified a 19-year-old girl as a shoplifter.

Death to the worthless corpo world that allowed this bullshit in the first place! Towards anarchist communism and social revolution!

Please grow and change as a person

As an American, I don’t know what opinion to have about this without knowing the woman’s race.

Literally what people on Lemmy think.

If she’s been flagged as shoplifter she’s probably black!

Congratulations, you just identified yourself as a racist and need to understand you can’t just judge someone without first getting to know them.

Why, because all shoplifters are black? I don’t understand. She’s being mistaken for another person, a real person on the system.

I used to know a smackhead that would steal things to order, I wonder if he’s still alive and whether he’s on this database. Never bought anything off him but I did buy him a drink occasionally. He’d had a bit of a difficult childhood.

Because facial recognition systems infamously cannot tell black people apart.

Because the algorithm trying to identify shoplifters is probably trained on a biased dataset.

> Why, because all shoplifters are black?

All the ones that get caught. When you’re white, you can steal whatever you want.

I think it’s more that all facial recognition systems have a harder time picking up faces with darker complexions (the same reason phone cameras are bad at it, for example). This failure leads to a bunch of false flags. It’s hilariously evident when you see police jurisdictions using it and arresting innocent people.

Not saying the woman is a person of color, but it would explain why it might be triggering.

What happens if there’s a consumer boycott of retailers who use this type of harassment tech? It’s disproportionately targeted towards minorities. I think Target was recently bullied into removing Pride items based on consumer action, so clearly mass action has an effect.

Boycotts are almost impossible to pull off successfully. This kind of thing demands legal action. IANAL but the facial recognition company putting her on a list of shoplifters is a claim that she’s a criminal, which sounds like textbook defamation to me.

For sure, I meant in addition to suing the banning retailers and the facial recognition tech company

Didn’t it turn out the items were just moved to another area of the store?

a) you’d have to know who does it, and you might not until you (or somebody else) get caught in the net; b) boycotts are more effective if there’s choice, which there won’t be if almost everybody starts using this shyte

More reputable source covering this and related stories: https://www.bbc.com/news/technology-69055945

Stop giving corporations the power to blacklist us from life itself.

you will sit down and be quiet, all you parasites stifling innovation, the market will solve this, because it is the most rational thing in existence, like trains, oh god how I love trains, I want to be f***ed by trains.

~~Rand

I can see the Invisible Hand of the Free Market, it’s giving me the finger.

Can it give invisible hand jobs?

Yes, but they’re in the “If you have to ask, you couldn’t afford it in three lifetimes.” price range

Darn!

Right up your ass, no less.

It charged me for lube, and I thought about paying for it, but same-day shipping was a bitch and a half… I tried second class mail, but I think my bumhole would have stretched enough for this to stop hurting before it got anywhere near close to here so I just opted for that.

The whole wide world of authors who have written about the difficulties of this new technological age and you choose the one who had to pretend her work was unpopular

Go read Rand. Her books literally advocate for an anti-social world order where the rich and powerful can do whatever they want without impediment, while the “workers” are described as parasites that should get in line or die.

Are you suggesting they shouldn’t be allowed to ban people from stores? The only problem I see here is misused tech. If they can’t verify the person, they shouldn’t be allowed to use the tech.

I do think there need to be repercussions for situations like this.

Well there should be a limited amount of ability to do so. I mean there should be police reports or something at the very least. I mean, what if Facial Recognition AI catches on in grocery stores? Is this woman just banned from all grocery stores now? How the fuck is she going to eat?

That’s why I said this was a misuse of tech. Because that’s extremely problematic. But there’s nothing to stop these same corps from doing this to a person even if the tech isn’t used. This tech just makes it easier to fuck up.

I’m against the use of this tech to begin with but I’m having a hard time figuring out if people are more upset about the use of the tech or about the person being banned from a lot of stores because of it. Cause they are separate problems and the latter seems more of an issue than the former. But it also makes fucking up the former matter a lot more as a result.

Reddit became a ban-happy wasteland, and if the tides swing a similar way, we’ll see a society where Big Tech gates people out of the very foundation of Modern Society. It’s exclusion that I’m against.

I wish I could remember where I saw it, but years ago I read something in relation to policing that said a certain amount of human inefficiency in a process is actually a good thing, because it helps check the bias and overreach that can occur when technology can do in seconds what would take a human days or months.

In this case, if a person is enough of a problem that their face becomes known at certain branches of a store, it’s entirely reasonable for that store to post a sign with their face saying they aren’t allowed. In my mind it would essentially create a certain equilibrium in terms of consequences and results. In addition to getting in trouble for the theft itself, that individual also has a certain amount of hardship placed on them that may require them to travel 40 minutes to do their shopping instead of 5 minutes to the nearby store. A sign and people’s memory also aren’t permanent, so after a certain amount of time that person would probably be able to go back to that store if they had actually grown out of it.

Or something to that effect. If they steal so much that they become known to the legal system there should be processes in place to address it.

And even with all that said, I’m just not that concerned with theft at large corporate retailers considering wage theft dwarfs thefts by individuals by at least an order of magnitude.

Can’t wait for something like this to get hacked. There’ll be a lot of explaining to do.

I read this in a Ricky Ricardo voice.

Still, I think the only way that would result in change is if the hack specifically went after someone powerful like the mayor or one of the richest business owners in town.

Now, now, I thought we had agreed not to use facial recognition. Am I going to have to collapse civilization, or are you going to behave? Last warning.

But you don’t need to do anything in order to let our civilization collapse.

Ah, come on! You’re spoiling the magic trick!

How you gonna do it?

Get facial recognition to lay the heat on someone else might be a good start. Gotta game the system to destroy it.

So many ways, it’s hard to pick just one. Plus it would ruin the surprise.

This is a bad situation for her. I am genuinely curious under what standing she is suing. Thinking it through, this seems like a situation where the laws might not have caught up to what is happening. I hope she gets some changes out of this, but I am really curious on the legal mechanics of how that might happen.

Stores in most developed countries, UK included, can refuse service only for legitimate reasons, and they have to do so uniformly, based on fair and unbiased rules. If they don’t, they’re at risk of an unlawful discrimination suit.

https://www.milnerslaw.co.uk/can-i-choose-my-customers-the-right-to-refuse-service-in-uk-law

She didn’t do anything that would be considered a “legitimate reason”, and although applied uniformly, it’s difficult to prove that an AI model doesn’t discriminate against protected groups. Especially with so many studies showing the opposite.

I think she has as much standing as anyone to sue for discrimination. There was no legitimate reason to refuse service, AI models famously discriminate against women and minorities, especially when it comes to “lower class” criminal behavior like shoplifting.

It’s not only that it discriminated against certain groups, but also that it in itself has a high enough error rate to make it unusable for any decision making. MAYBE to select people for screening, but we would be falling further down into a dystopian future.

At the current performance, none of these AIs should be involved in anything this critical.
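A rough illustration of why even a small error rate matters when everyone walking through the door gets scanned. The figures below are hypothetical (not Facewatch’s real numbers), and `flag_precision` is just an illustrative helper; the point is the base-rate effect, not the exact values.

```python
def flag_precision(population, offenders, tpr, fpr):
    """Fraction of flagged people who are genuinely on the watchlist.

    population: number of people scanned
    offenders:  how many of them are genuinely on the watchlist
    tpr:        chance a genuine offender gets flagged (true positive rate)
    fpr:        chance an innocent shopper gets flagged (false positive rate)
    """
    innocent = population - offenders
    true_flags = offenders * tpr
    false_flags = innocent * fpr
    return true_flags / (true_flags + false_flags)


# Hypothetical: 10,000 shoppers scanned, 10 genuinely on the list,
# a matcher that catches 99% of them and wrongly flags 1% of everyone else.
p = flag_precision(population=10_000, offenders=10, tpr=0.99, fpr=0.01)
print(f"{p:.1%} of flags point at a real offender")
```

With these made-up numbers only about 9% of flags point at a genuine offender, and the rarer real offenders are among the shoppers, the worse that ratio gets.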

I’d also wonder about a case against the company supplying the facial rec software/DB. Essentially they are fingering her as a criminal in a way that has a notable impact, which seems like a potential case for slander/libel to me (not sure what a “bad db entry” would fall under in this case).

She needs to apply for jobs at these companies that use the software in order to generate damages she can sue over.

I am waiting to follow the case for updates, because while I hope the outcome pushes back on AI systems like this, I am skeptical that current laws will treat what is happening as protected-class discrimination. I presume that in the UK the burden of proving fault in the AI lies with the plaintiff, and that is at the heart of whether the reason is legitimate in the eyes of the law.

This is an example of why “they’re private businesses so they can ban whoever they want for any reason” is problematic.

I’m wondering if maybe there should be a size threshold for full consent on business dealings. Like if you run a small business, you get to kick anyone out for any reason. But if your business is so big that it runs a significant portion of the market, then you would need to adhere to certain standards for how and when you ban someone from doing business with you.

A tangent to explore. I am, though, curious how the current case is expected to go forward under the current laws.

‘Standing’? This isn’t the US. The law in the UK is a bit different.

In British administrative law, an applicant needs to have a sufficient interest in the matter to which the application relates

I think this woman can show that

Sufficient interest in the matter equates to standing in a general manner.

And this is why I’m asking, because I know little about UK law, and am trying to figure out how this is going to move forward. She can sue, now I wonder about the theory that leads to a win. Protected categories is a start, but it feels vague, and I’m curious what the precise angle and evidence brought in will be.

The developers should be looking at jail time, as they falsely accused someone of committing a crime. This should be treated exactly like if I SWATted someone.

I’m not so sure the blame should solely be placed on the developers - unless you’re using that term colloquially.

You can arrest their managers as well, good point.

Developers were probably the first people to say that it isn’t ready. Blame the sales people that will say anything for money.

It’s impossible to have a 0% false positive rate, it will never be ready and innocent people will always be affected. The only way to have a 0% false positive rate is with the following algorithm:

```python
def is_shoplifter(face_scan):
    return False
```

```
line 2
    return False
    ^^^^^^
IndentationError: expected an indented block after function definition on line 1
```

Weird, for me the indentation renders correctly. Maybe because I used Jerboa and single ticks instead of triple ticks?

Interesting. This is certainly not the first time there have been markdown parsing inconsistencies between clients on Lemmy, the most obvious example being subscript and superscript, especially when ~multiple words~ ^get used^ or you use ^reddit ^style ^(superscript text).

But yeah, checking just now on Jerboa you’re right, it does display correctly the way you did it. I first saw it on the web in lemmy-ui, which doesn’t display it properly, unless you use the triple backticks.
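For anyone wondering why the flattened version fails at all: Python treats indentation as syntax, so once a renderer strips the leading spaces the function has no body. A small sketch using the built-in `compile()` shows the difference; the two source strings are just the snippet from this thread, and `compiles` is an illustrative helper.

```python
# The snippet as the author wrote it, with the body indented.
INDENTED = "def is_shoplifter(face_scan):\n    return False\n"
# The same snippet after a renderer drops the leading whitespace.
FLATTENED = "def is_shoplifter(face_scan):\nreturn False\n"


def compiles(source):
    """Return None if source compiles, otherwise the SyntaxError it raised."""
    try:
        compile(source, "<comment>", "exec")
    except SyntaxError as err:  # IndentationError is a subclass of SyntaxError
        return err
    return None


print(compiles(INDENTED))  # None
err = compiles(FLATTENED)
print(type(err).__name__, err.lineno, err.msg)
```

The exact wording of `err.msg` varies between Python versions; the error type and the line number (2) are what matter here.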

In their defense, it didn’t return a false positive
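A toy version of the trade-off, using made-up matcher scores (one of them an innocent lookalike scoring 0.96, much like the “duplicate” that got her flagged). Raising the threshold cuts false positives but also misses real matches; on this sample, any threshold strict enough to clear the lookalike catches nobody, which is exactly what the never-flag function does. The scores, thresholds and the `rates` helper are all hypothetical.

```python
# Hypothetical (score, is_really_on_watchlist) pairs from a face matcher.
# These numbers are invented for illustration only.
SAMPLES = [
    (0.95, True), (0.88, True), (0.72, True), (0.40, True),
    (0.96, False), (0.65, False), (0.30, False), (0.10, False),
]


def rates(predict):
    """Return (false_positive_rate, true_positive_rate) for a predictor."""
    fp = sum(1 for score, truth in SAMPLES if predict(score) and not truth)
    tp = sum(1 for score, truth in SAMPLES if predict(score) and truth)
    negatives = sum(1 for _, truth in SAMPLES if not truth)
    positives = sum(1 for _, truth in SAMPLES if truth)
    return fp / negatives, tp / positives


def is_shoplifter(face_scan):
    # The "algorithm" from the thread: never flag anyone.
    return False


for threshold in (0.5, 0.8, 0.95):
    fpr, tpr = rates(lambda score: score >= threshold)
    print(f"threshold={threshold}: FPR={fpr:.2f}, TPR={tpr:.2f}")

print("always False:", rates(is_shoplifter))
```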

They worked on it, they knew what could happen. I could face criminal charges if I do certain things at work that harm the public.

I have no idea where Facewatch got their software from. The developers of this software could’ve been told their software will be used to find missing kids. Not really fair to blame developers. Blame the people on top.

It says right on their webpage what they are about.

Developers don’t always work directly for companies. Companies pivot.

I get your point but totally disagree this is the same as SWATing. People can die from that. While this is bad, she was excluded from stores, not murdered

Difference in degree not kind

In the UK at least a SWATing would be many many times more deadly and violent than a normal police interaction. Can’t make the same argument for the USA or Russia, though.

You lack imagination. What happens when the system mistakenly identifies someone as a violent offender and they get tackled by a bunch of cops, likely resulting in bodily injury?

People should be thrown in jail over a hypothetical?

Mike Judge calling it out again https://youtu.be/5d7SaO0JAHk?si=rieJnFE0YHd-_3lY

That would then be an entirely different situation?

I mean, the article points out that the lady in the headline isn’t the only one who has been affected; A dude was falsely detained by cops after they parked a facial recognition van on a street corner, and grabbed anyone who was flagged.

No, it wouldn’t be. The base circumstance is the same, the software misidentifying a subject. The severity and context will vary from incident to incident, but the root cause is the same - false positives.

There’s no process in place to prevent something like this going very very bad. It’s random chance that this time was just a false positive for theft. Until there’s some legislative obligation (such as legal liability) in place to force the company to create procedures and processes for identifying and reviewing false positives, then it’s only a matter of time before someone gets hurt.

You don’t wait for someone to die before you start implementing safety nets. Or rather, you shouldn’t.

That’s not very reassuring, we’re still only one computer bug away from that situation.

Presumably she wasn’t identified as a violent criminal because the facial recognition system didn’t associate her duplicate with that particular crime. The system would be capable of associating any set of crimes with a face. It’s not like you get a whole new face for each different possible crime. So, we’re still one computer bug away from seeing that outcome.

This happens in the USA without face recognition

Reminds me of when I went to the supermarket with some classmates. We got kicked out while waiting in line because they didn’t want middle schoolers there, since we were all thieves anyway. So most of the group walked out without paying.

People who blindly support this type of tech and AI being slapped into everything always learn the hard way when a case like this happens.

Sadly there won’t be any learning; the security company will improve the tech and continue as usual.

This shit is here to stay :/

Agreed on all points, but “improve the tech” probably belongs in quotes. If there are no real consequences, they may just accept some empty promises and continue as before.

Just listened to a podcast with a couple of guys talking about the AI thing going on. One thing they said was really interesting to me. I’ll paraphrase my understanding of what they said:

- In 2020, people realized that the same model, same architecture, but with more parameters, i.e. a larger version of the model, behaved more intelligently and had a larger set of skills than the same model with fewer parameters.

- This means you can trade money for IQ. You spend more money, get more computing power, and your model will be better than the other guy’s

- Your model being better means you can do more things, replace more tasks, and hence make more money

- Essentially this makes the current AI market a very straightforward money-in-determines-money-out situation

- In other words, the realization that the same AI model, only bigger, was significantly better, created a pathway for reliably investing huge amounts of money into building bigger and bigger models

So basically AI was meandering around trying to find the right road, and in 2020 it found a road that goes a long way in a straight line, enabling the industry to just floor the accelerator.

The direct relationship this model creates between more neurons/weights/parameters on the one hand, and more intelligence on the other, creates an almost arbitrage-easy way to absorb tons of money into profitable structures.

Dang… the UK got China’d.

Is it even legal? What happened to consumer protection laws?

Brexit. The EU has laws forbidding stuff like this.

I don’t think this is legal in Russia, Ukraine and Belarus either.

I’m going to think twice before entering any big box store because of this.

I am amazed that anyone is still using them. It is the 21st century, just shop online.

Last time I ordered big boxes online, they just shipped me empty boxes. I don’t know how they screwed that up, but then I’ve always gone to big box stores so I can actually see the big boxes I’m buying.

No need to avoid such stores, just wear protection to keep your face from being falsely flagged.

Welcome to 2024, where we move closer and closer to Black Mirror being real due to our technological improvements.

Plus our allowing sociopaths to hold so much power.

Allowing bad character to reign generally speaking. Sociopaths are like seed crystals; it’s the rest of us implanting their way of life that do most of the evil, even if they’re the ones providing the purest examples.

We need to be good people, not just nice people, in order to improve things.

Could attaching infrared LEDs to glasses or caps be a way to prevent these systems from capturing people’s faces?

Think I saw something on Black Mirror once, or it might have been another show.

Possible business opportunity selling to crackheads lol.

I presume at that point the store would just have security walk out the person wearing the hat.

How would they know it had lights in it?

It could be a raver’s LED hat that isn’t turned on.

I think IR lights on glasses can blind cameras, and there are also infrared-blocking glasses that also reflect IR light from cameras back at them. So yes, adding lights or reflectors can be effective.

But we shouldn’t even have to consider these workarounds to maintain our privacy and security. And even if we start wearing these glasses or fooling the systems, governments will outlaw the use of such circumventing tech.

Our reaction to this needs to be “we will not allow this tech to be used against us, period.” Ban it for law enforcement, ban it for commerce, ban it in airports and schools.

> governments will outlaw the use of such circumventing tech

They can’t stop all of us. If they outlaw using it, I’d support (or even organize) a protest group that organizes widespread use of them.

Well in that case, one brand of such glasses is Reflectacles 😎


Cool! Looks like they also have a discount for Monero purchases. I might have to pick one up.

I wish they would make a line available for cheap so a charity could hand them out for free, ~$150 is a bit much.

Very neat, I wonder how effective they are at confusing facial recognition and 3D facial scanning systems? Not that we often encounter the 3D scanning, but an interesting aspect to consider.

I just wonder if I’d get in trouble for wearing them at the airport. That’s the most likely place for 3D facial scanning.

But AFAIK, most facial recognition systems use IR, whether they’re 2D or 3D, so I would guess they’d be pretty effective.

Thanks for the informative and thought out reply.

Also, thanks for killing my joke business idea by mentioning a brand that makes these.

I would like to see them in the rim of a cap too, as I always have a cap on my head.

Edit: Hot damn they’re expensive. Business idea is back.

Ultraviolet would be a terrible idea as UV-A and UV-B can cause skin cancer over prolonged exposure and UV-C will straight up burn your eyes and skin.

They fixed it, the new implementation requires a DNA sample now.