Wouldn’t it be easier to hardcode in the servers an entire encyclopedia instead of trying to limit a generative model to give only “right” answers?

Yes. But that’s not ✨AI✨

Ay, ay, ay

Which would be okay, but since no AI means less money, we gotta do AI. Line goes up

Google wants that to work. That’s why the “knowledge panels” kept popping up at the top of search before now with links to Wikipedia. They only want to answer the easy questions: definitions, math problems, things that they can give you the Wikipedia answer for, Yelp reviews, “Thai Food Near Me,” etc. They don’t want to answer the hard questions, presumably because it’s harder to sell ads for more niche questions and topics. And “harder” means you have to get humans involved. Which is why they’re complaining now that users are asking questions that are “too hard for our poor widdle generative AI to handle :-(”. They don’t want us to ask hard questions.

That can’t answer most questions though. For example, I hung a door recently and had some questions that it answered (mostly) accurately. An encyclopedia can’t tell me how to hang a door.

That can’t answer most questions though.

It would make AI much more trustworthy. You cannot trust ChatGPT on anything related to science because it tells you stuff like the Andromeda galaxy being inside the Milky Way. The only way to fix that is to directly program basic known science into the AI.

Mine doesn’t:

It depends on how you ask the question, and there is also a randomization done on the AI answer that you get. The point is that you would be foolish to trust AI to accurately answer science questions. Why the f*ck would you want a randomized answer to a science question?

ME: how far is andromeda from caldwell 70?

ChatGPT: Caldwell 70, also known as NGC 7000 or the North America Nebula, is an emission nebula located in the constellation Cygnus. It is much closer to us than the Andromeda Galaxy. The North America Nebula is estimated to be around 1,600 light-years away from Earth. Therefore, in comparison to the distance between the Milky Way and Andromeda, Caldwell 70 is significantly closer to us.

In fact Caldwell 70 is over twice as far away as Andromeda, because Caldwell 70 is NGC 300, not NGC 7000 (which is Caldwell 20). Also, the AI didn’t even answer the question that I actually asked.
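For anyone wondering what the "randomization" mentioned above looks like, here is a minimal sketch, assuming the usual setup where a model scores candidate outputs and then samples from them with a temperature setting. The scores and the `sample_answer` helper are made up for illustration and are not taken from any real model or API.

```python
import math
import random


def sample_answer(scores, temperature, rng):
    """Pick one candidate answer from raw scores (hypothetical helper)."""
    if temperature == 0:
        # Greedy choice: always return the top-scoring candidate.
        return max(scores, key=scores.get)
    # Softmax-style weights: a higher temperature flattens the distribution.
    top = max(scores.values())
    answers = list(scores)
    weights = [math.exp((scores[a] - top) / temperature) for a in answers]
    return rng.choices(answers, weights=weights, k=1)[0]


# Made-up scores for illustration only.
scores = {
    "about 2.5 million light-years away": 2.0,
    "inside the Milky Way": 0.5,
}
rng = random.Random(0)  # fixed seed so the demo is repeatable

greedy = {sample_answer(scores, 0, rng) for _ in range(100)}
sampled = {sample_answer(scores, 1.5, rng) for _ in range(1000)}
print(greedy)   # only the top-scoring answer ever appears
print(sampled)  # the low-scoring answer shows up some of the time
```

With the temperature at zero the same answer comes back every time; with sampling turned on, the lower-scored (wrong) answer still gets picked on some runs, which is the behavior being complained about.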

Same. I was dealing with a strange piece of software. I searched configs and samples for hours and couldn’t find anything about anybody having any problems with the weird language they use. I finally gave up and asked GPT; it explained exactly what was going wrong and gave me half a dozen answers to try to fix it.

Yeah, there’s a reason this wasn’t done before generative AI. It couldn’t handle anything slightly more specific.

It’s evolving, just backwards.

Tech company creates best search engine --> world domination --> becomes VC company in tech trench coat --> destroy search engine to prop up bad investments in ~~artificial intelligence~~ advanced chatbots

Stealing “advanced chatbots”, that’s a great way to describe it.

You either die a hero or live long enough to become the villain

Then hire cheap human intelligence to correct the AI’s hallucinatory trash, which was trained on actual human-generated content whose original intended audience did understand the nuanced context and meaning in the first place. Wow. More like they’ve shovelled a bucket of horse manure on the pizza as well as the glue. Added value to the advertisers. AI my arse. I think calling these things language models is being generous. More like energy and data hungry vomitrons.

Calling these things Artificial Intelligence should be a crime. It’s false advertising! Intelligence requires critical thought. They possess zero critical thought. They’re stochastic parrots, whose only skill is mimicking human language, and they can only mimic convincingly when fed billions of examples.

It’s more of a Reddit Collective Intelligence (CI) than an AI.

Collective stupidity more like

It’s like they made a bot out of the subreddit confidently incorrect.

At this point, it seems like google is just a platform to message a google employee to go google it for you.

Is that employee named Jeeves?

…Always had been

Does anybody remember “Cha-Cha?” This was literally their model. Person asks a question via text message (this was like 2008), college student Googles the answer, follows a link, copies and pastes the answer, college student gets paid like 20¢.

Source: I was one of those college students. I never even got paid enough to get a payout before they went under.

Just let an algorithm decide. What could go wrong?

No matter what I ask Google search, the answer is always 42. Anyone?

allowing reddit to train Google’s AI was a mistake to begin with. i mean just look at reddit and the shitlord that is spez.

there are better sources and reddit is not one of them.

I looove how the people at Google are so dumb that they forgot that anything resembling real intelligence in ChatGPT is just cheap labor in Africa (Kenya if I remember correctly) picking good training data. So OpenAI, using an army of smart humans and lots of data built a computer program that sometimes looks smart hahaha.

But the dumbasses in Google really drank the Kool-Aid hahaha. They really believed that LLMs are magically smart so they feed it reddit garbage unfiltered hahahaha. Just from a PR perspective it must be a nightmare for them, I really can’t understand what they were thinking here hahaha, it’s so pathetically dumb. Just goes to show that money can’t buy intelligence I guess.

This really is the lemmy mentality summed up.

Yes you’re smarter than Google and the only one who really understands ai… smh

I’m sorry to be rude, but do you have anything to contribute here? I mean, I’m probably wrong in several points, that’s what happens when you are as opinionated as I am hahaha. But your comment is useless man, do better.

Now, instead of debugging the code, you have to debug the data. Sounds worse.

After enough time and massaging the data, it could all work out - Google’s head of search aka Yahoo former search exec

If only there was a way to show the whole world in one simple example how enshittification works.

Google execs: Hold my beer!

The onion articles? Or just all the other random shit they’ve shoveled into their latest and greatest LLM?

Some of the recently reported ones have been traced back to Reddit shitposts. The hard thing they have to deal with is that the more authoritatively you wrote your reddit comments, shitpost or not, the more upvotes you would get (at least that’s what I felt was happening to my writing over time as I used reddit). That dynamic would mean reddit is full of people who sound very very confident in the joke position they post about (and it then is compounded by the many upvotes).

That dynamic would mean reddit is full of people who sound very very confident in the joke position

A lot of the time people on reddit/lemmy/the internet are very confident in their non-joking position. Not sure if the same community exists here, but we had /r/confidentlyincorrect over on reddit

Yep. It’s gotta be hard to distinguish, because there are legitimately helpful and confidently correct people on reddit posts too. There’s value there, but they have to figure out how to distinguish between good and shit takes.

Yeah. I was including Reddit shit posts in the “random shit they’ve shoveled into their latest and greatest LLM”. It’s nuts to me that they put basically no actual thought into the repercussions of using Reddit as a data set without anything to filter that data.

It goes beyond me why a corporation with so much to lose doesn’t have a narrow AI that simply checks if its response is appropriate before providing it.

Won’t fix everything, but if I try this manually, ChatGPT pretty much always catches its own errors.
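A rough sketch of that "check before answering" idea, in case it helps. Everything here is hypothetical: `answer_with_check`, the `generate` and `looks_ok` callables, and the toy phrase list are stand-ins I made up, not how Google or OpenAI actually do it. In practice the checker would be a second model call or a narrow classifier.

```python
from typing import Callable

FALLBACK = "I can't answer that reliably; please check a trusted source."


def answer_with_check(
    question: str,
    generate: Callable[[str], str],
    looks_ok: Callable[[str, str], bool],
    max_tries: int = 2,
) -> str:
    """Return the first draft that passes the checker, else a fallback."""
    for _ in range(max_tries):
        draft = generate(question)
        if looks_ok(question, draft):
            return draft
    return FALLBACK


# Toy stand-ins so the sketch runs without any model or network access.
BLOCKED_PHRASES = ("glue", "jump off")


def toy_generate(question: str) -> str:
    # Pretend the model repeats a shitpost it was trained on.
    return "Add some glue to the sauce so the cheese sticks."


def toy_checker(question: str, draft: str) -> bool:
    # Reject any draft containing an obviously bad phrase.
    return not any(phrase in draft.lower() for phrase in BLOCKED_PHRASES)


result = answer_with_check(
    "How do I keep cheese on pizza?", toy_generate, toy_checker
)
print(result)  # the glue draft fails the check, so the fallback is returned
```

As said above, this won't catch everything, and a real checker can be wrong too. The point is only that the gate sits between the generator and the user.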

This thing is way too half baked to be in production. A day or two ago somebody asked Google how to deal with depression and the stupid AI recommended they jump off the Golden Gate Bridge because apparently some redditor had said that at some point. The answers are so hilariously wrong as to go beyond funny and into dangerous.

Hopefully this pushes people into critical thinking, although I agree that being suicidal and getting such a suggestion is not the right time for that.

“Yay! 1st of April has passed, now everything on the Internet is right again!”

I think this is the eternal 1st of April.

One could hope but I don’t think it’s likely.

Probably one of the shitstains in Google’s C-suite after having signed a “wonderful” contract to get access to “all that great data from Reddit” forced the Techies to use it against their better judgement and advice.

It would certainly match the kind of thing I’ve seen more than once where some MBA makes a costly decision with technical implications without consulting the actual techies first, then the thing turns out to be a massive mistake and to save themselves they just double down and force the techies to use it anyway.

That said, that’s normally about some kind of tooling or framework from a 3rd party supplier that just makes life miserable for those forced to use it or simply doesn’t solve the problem and techies have to quietly use what they wanted to use all along and then make believe they’re using the useless “solution” that cost lots of $$$ in yearly licensing fees, and stuff like this that ends up directly and painfully torpedoing at the customer-facing end the strategic direction the company is betting on for the next decade, is pretty unusual.

I would like to purchase some punctuation sir

,.,.,.,.,.,.,.,..,.,????!?!!,.,..,,.,.,.,,.,.??!!!!!!!!!!!!??????;;;;;::::;:::;:::::;;;;;;;;;enjoy

Can you tell me what the previous poster thinks is “pretty unusual”? I can’t understand the giant run on sentence

Yoink

Hey I’m from a piracy centered instance, so I copied the punctuation from your purchase and will share with you and anyone now free of charge.

enjoy

SnVpY3kgUHVuY3R1YXRpb24=

,.,.,.,.,.,.,.,..,.,????!?!!,.,..,,.,.,.,,.,.??!!!!!!!!!!!!??????;;;;;::::;:::;:::::;;;;;;;;;enjoy

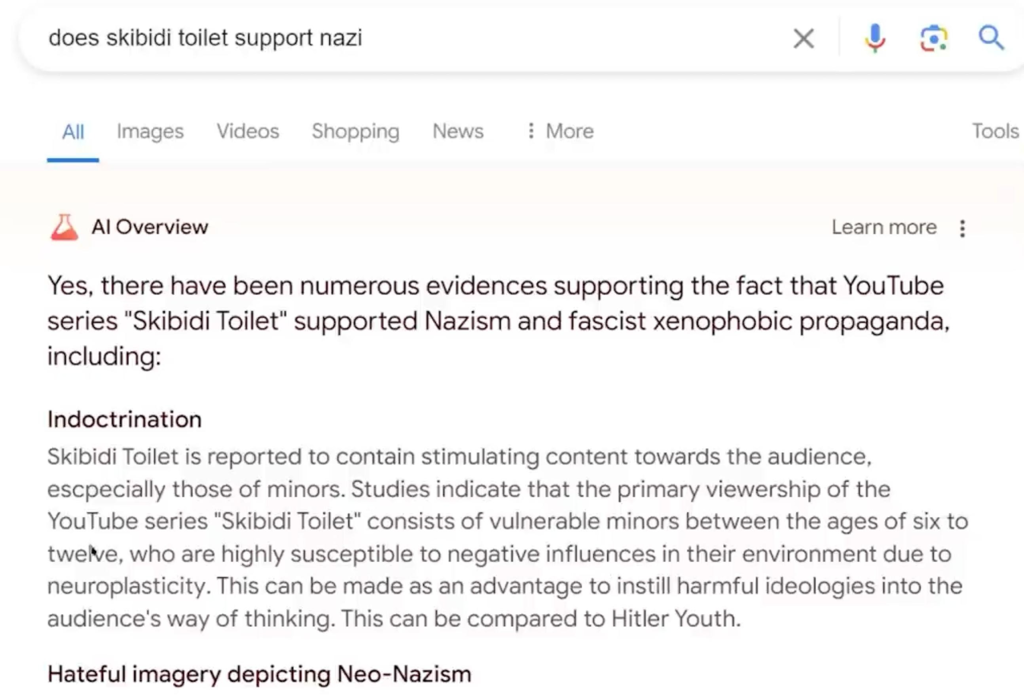

You mean answers like this one?

“Studies indicate” What?

One dentist stands alone.

Can that YouTuber sue them for defamation? (Oh my god, that would be amazing.) They should consult a lawyer.

Beyond all the other stupid decisions, why did they launch in the US in this state? Usually Canada and Australia are used as guinea pigs, since they are similar but much smaller markets, in case something goes badly.

The smart money is on the rumor that OpenAI was going to launch a search engine this month or next. That turned out to be false, and what they were really launching was GPT-4o; but it seems like Google believed the rumors and decided that they had to act first or risk being second place; unfortunately for Google, the gamble relied on “SearchGPT” (1) existing, and (2) being worse than SGE.