A judge in Washington state has blocked video evidence that’s been “AI-enhanced” from being submitted in a triple murder trial. And that’s a good thing, given the fact that too many people seem to think applying an AI filter can give them access to secret visual data.

I’d love to see the “training data” for this model, but I can already predict it will be 99.999% footage of minorities labelled ‘criminal’.

And cops going “Aha! Even AI thinks minorities are committing all the crime”!

Tell me you didn’t read the article without telling me you didn’t read the article

No computer algorithm can accurately reconstruct data that was never there in the first place.

Ever.

This is an ironclad law, just like the speed of light and the acceleration of gravity. No new technology, no clever tricks, no buzzwords, no software will ever be able to do this.

Ever.

If the data was not there, anything created to fill it in is by its very nature not actually reality. This includes digital zoom, pixel interpolation, movement interpolation, and AI upscaling. It preemptively also includes any other future technology that aims to try the same thing, regardless of what it’s called.

If people don’t get the second law of thermodynamics, explaining this to them is useless. EDIT: … too.

Are you saying CSI lied to me?

Even CSI: Miami?

Horatio would NEVER 😎

Yeeeeeahhh he would. Sneaky one.

No computer algorithm can accurately reconstruct data that was never there in the first place.

Okay, but what if we’ve got a computer program that can just kinda insert red eyes, joints, and plumes of chum smoke on all our suspects?

It preemptively also includes any other future technology that aims to try the same thing

No it doesn’t. For example, with enough compute power you can correct for distortions introduced by camera lenses/sensors/etc. and drastically increase image quality. This photo of Pluto, for instance, was taken from 7,800 miles away. Click the link for a version of the image that hasn’t been resized/compressed by Lemmy:

The unprocessed image would look nothing at all like that. There’s a lot more data in an image than you can see with the naked eye, and algorithms can extract/highlight that data. That’s obviously not what a generative AI algorithm does (those should never be used), but there are other algorithms which are appropriate.

The reality is every modern photo is heavily processed - look at this example by a wedding photographer, even with a professional camera and excellent lighting the raw image on the left (where all the camera processing features are disabled) looks like garbage compared to exactly the same photo with software processing:

This is just smarter post processing, like better noise cancelation, error correction, interpolation, etc.

But ML tools extrapolate rather than interpolate, which adds things that weren’t there.

No computer algorithm can accurately reconstruct data that was never there in the first place.

What you are showing is (presumably) a modified visualisation of existing data. That is: given a photo with known lighting and lens distortion, we can use math to display the data (lighting, lens distortion, and the input registered by the camera) in a plethora of different ways. You can invert all the colours if you like. It’s still the same underlying data. Modifying how strongly certain hues are shown, or correcting for known distortion, are just techniques to visualise the data in a clearer way.

“Generative AI” is essentially just non-predictive extrapolation based on some data set, which is a completely different ball game, as you’re essentially making a blind guess at what could be there, based on an existing data set.

making a blind guess at what could be there, based on an existing data set.

Here’s your error. You yourself are contradicting the first part of your sentence with the last. The guess is not “blind” because the prediction is based on an existing data set. Looking at a half-occluded circle with a model and then reconstructing the other half is not a “blind” guess; it is a highly probable extrapolation that can be very useful, because in most situations it will be the second half of the circle. With a certain probability, you have created new valuable data for further analysis.

Looking at a half circle and guessing that the “missing part” is a full circle is as much of a blind guess as you can get. You have exactly zero evidence that there is another half circle present. The missing part could be anything, from nothing to any shape that incorporates a half circle. And you would be guessing without any evidence whatsoever as to which of those things it is. That’s blind guessing.

Extrapolating into regions without prior data with a non-predictive model is blind guessing. If it wasn’t, the model would be predictive, which generative AI is not, is not intended to be, and has not been claimed to be.

But you are not reporting the underlying probability, just the guess. There is no way, then, to distinguish a bad guess from a good guess. Let’s take your example and place a fully occluded shape. Now the most probable guess could still be a full circle, but with a very low probability of being correct. Yet that guess is reported with the same confidence as your example. When you carry out this exercise for all extrapolations with full transparency of the underlying probabilities, you find yourself right back in the position the original commenter has taken. If the original data does not provide you with confidence in a particular result, the added extrapolations will not either.
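A minimal Python sketch of the point about unreported probabilities, assuming a hypothetical shape-completion model that returns a probability for each candidate completion. The shapes, the numbers, and the `best_guess` helper are invented for illustration and do not come from any real tool.

```python
# Sketch only: the candidate shapes and probabilities are invented for illustration,
# not taken from any real model.

def best_guess(distribution: dict[str, float]) -> str:
    """Return only the most probable completion, the way an 'enhanced' image does."""
    return max(distribution, key=distribution.get)

# Hypothetical model outputs for the half-occluded and fully occluded scenarios.
half_occluded = {"circle": 0.90, "semicircle": 0.06, "other": 0.04}
fully_occluded = {"circle": 0.30, "square": 0.25, "triangle": 0.25, "nothing": 0.20}

for label, dist in [("half occluded", half_occluded), ("fully occluded", fully_occluded)]:
    guess = best_guess(dist)
    # The rendered output is identical ("circle"); only the hidden probability differs.
    print(f"{label}: shows {guess!r}, model probability {dist[guess]:.2f}")
```

Both cases render the same circle, so a viewer who only sees the output cannot tell the 0.90 guess from the 0.30 one.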

None of your examples are creating new legitimate data from the whole cloth. They’re just making details that were already there visible to the naked eye. We’re not talking about taking a giant image that’s got too many pixels to fit on your display device in one go, and just focusing on a specific portion of it. That’s not the same thing as attempting to interpolate missing image data. In that case the data was there to begin with, it just wasn’t visible due to limitations of the display or the viewer’s retinas.

The original grid of pixels is all of the meaningful data that will ever be extracted from any image (or video, for that matter).

Your wedding photographer’s picture actually throws away color data in the interest of contrast and to make it more appealing to the viewer. When you fiddle with the color channels like that and see all those troughs in the histogram that make it look like a comb? Yeah, all those gaps and spikes are actually original color/contrast data that is being lost. There is less data in the touched-up image than the original, technically, and if you are perverse and own a high bit depth display device (I do! I am typing this on a machine with a true 32-bit-per-pixel professional graphics workstation monitor.) you actually can stare at it and see the entirety of the detail captured in the raw image before the touch-ups. A viewer might not think it looks great, but how it looks is irrelevant from the standpoint of data capture.
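A minimal Python sketch of the histogram comb, assuming the edit is a simple linear levels stretch that maps input levels 64 to 191 onto the full 0 to 255 range. This is a stand-in for whatever the photographer actually did. Clipping collapses every level below 64 into black and every level above 191 into white, and the stretch spreads the remaining 128 levels over 256 output slots, so empty bins open up between them.

```python
# Sketch only: a linear stretch of [64, 191] onto [0, 255] is an assumed example edit.

def stretch(value: int, low: int = 64, high: int = 191) -> int:
    """Linearly map [low, high] onto [0, 255], clipping values outside the range."""
    scaled = (value - low) * 255 / (high - low)
    return max(0, min(255, round(scaled)))

original_levels = list(range(256))                  # every possible 8-bit input level
edited_levels = [stretch(v) for v in original_levels]

distinct_before = len(set(original_levels))         # 256 distinct levels
distinct_after = len(set(edited_levels))            # clipping merged many levels together
used = set(edited_levels)
gaps = [v for v in range(256) if v not in used]     # the empty "comb teeth" in the histogram

print(distinct_before, distinct_after, len(gaps))
```

Half of the distinguishable levels are gone after this edit, which is the data loss the comment describes; a real editor's curve would lose a different amount.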

They talked about algorithms used for correcting lens distortions with their first example. That is absolutely a valid use case and extracts new data by making certain assumptions with certain probabilities. Your newly created law of nature is just your own imagination and is not the prevalent understanding in the scientific community. No, quite the opposite, scientific practice runs exactly counter to your statements.

In my first year of university, we had a fun project to make us get used to physics. One of the projects required filming someone throwing a ball upwards, and then using the footage to get the maximum height the ball reached, and doing some simple calculations to get the initial velocity of the ball (if I recall correctly).

One of the groups that chose that project was having a discussion on a problem they were facing: the ball was clearly moving upwards on one frame, but on the very next frame it was already moving downwards. You couldn’t get the exact apex from any specific frame.

So one of the guys, bless his heart, gave a suggestion: “what if we played the (already filmed) video in slow motion… And then we filmed the video… And we put that one in slow motion as well? Maybe do that a couple of times?”

A friend of mine was in that group and he still makes fun of that moment, to this day, over 10 years later. We were studying applied physics.
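A minimal Python sketch of why the slow-motion idea cannot work, assuming playback simply repeats each recorded frame. Each frame is represented only by the ball’s height in it, and the heights are hypothetical numbers, not measurements. Players that blend or interpolate frames would produce synthesized in-between frames, which is interpolation rather than new measurement.

```python
# Sketch only: frames are reduced to hypothetical ball heights in metres.

def slow_motion(frames: list[float], factor: int) -> list[float]:
    """Play back `factor` times slower by showing each recorded frame `factor` times."""
    return [frame for frame in frames for _ in range(factor)]

recorded = [1.8, 2.4, 2.6, 2.5, 2.1]     # hypothetical: the true apex falls between frames

once = slow_motion(recorded, 4)
twice = slow_motion(once, 4)             # "film the slowed video and slow it down again"

print(max(recorded), max(twice))         # the highest observed height does not change
print(set(twice) == set(recorded))       # no new frames were created
```

However many times the footage is slowed down, the set of distinct frames stays exactly the five that were recorded.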

Digital zoom is just cropping and enlarging. You’re not actually changing any of the data. There may be enhancement applied to the enlarged image afterwards but that’s a separate process.

But the fact remains that digital zoom cannot create details that were invisible in the first place due to the distance from the camera to the subject. Modern implementations of digital zoom always use some manner of interpolation algorithm, even if it’s just a simple linear blur from one pixel to the next.
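A minimal Python sketch of 2x digital zoom on one row of pixels, assuming plain linear interpolation. Real cameras and phones use various, often proprietary, resampling filters, and `zoom_row_2x` is a hypothetical helper. Every inserted pixel is just the average of its two original neighbours, so the result is smoother, not more detailed.

```python
# Sketch only: linear interpolation is an assumed stand-in for a real zoom filter.

def zoom_row_2x(row: list[float]) -> list[float]:
    """Insert the midpoint between each pair of neighbouring pixels."""
    out: list[float] = []
    for left, right in zip(row, row[1:]):
        out.append(left)
        out.append((left + right) / 2)   # new pixel = average of its two neighbours
    out.append(row[-1])
    return out

row = [10.0, 10.0, 200.0, 10.0]          # hypothetical pixel intensities
zoomed = zoom_row_2x(row)
print(zoomed)  # [10.0, 10.0, 10.0, 105.0, 200.0, 105.0, 10.0]

# Every inserted value lies between its two original neighbours.
assert all(min(a, b) <= m <= max(a, b)
           for a, m, b in zip(zoomed[0::2], zoomed[1::2], zoomed[2::2]))
```

The original pixels reappear unchanged at the even positions; nothing in the output was measured that was not already in `row`.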

The problem is not in how digital zoom works, it’s in how people think it works but doesn’t. A lot of people (i.e. [l]users, ordinary non-technical people) still labor under the impression that digital zoom somehow makes the picture “closer” to the subject and can enlarge or reveal details that were not detectable in the original photo, which is a notion we need to excise from people’s heads.

I 100% agree with your primary point. I still want to point out that a detail in a 4k picture that takes up a few pixels will likely be invisible to the naked eye unless you zoom. “Digital zoom” without interpolation is literally just that: enlarging the picture so that you can see details that take up too few pixels for you to discern them clearly at normal scaling.

One little correction, digital zoom is not something that belongs on that list. It’s essentially just cropping the image. That said, “enhanced” digital zoom I agree should be on that list.

Suddenly thinking of someone using moon photos from their phone as evidence.

That’s wrong. With a degree of certainty, you will always be able to say that this data was likely there. And because existence is all about probabilities, you can expect specific interpolations to be an accurate reconstruction of the data. We do it all the time with resolution upscaling, for example. But of course, from a certain lack of information onward, the predictions become less and less reliable.

By your argument, nothing is ever real, so let’s all jump into a chasm.

Entropy and information theory are very real; they’re embedded in quantum physics.

Unicorns are also real - we created them through our work in fiction.

There’s a grain of truth to that. Everything you see is filtered by the limitations of your eyes and the post-processing applied by your brain which you can’t turn off. That’s why you don’t see the blind spot on your retinas where your optic nerve joins your eyeball, for instance.

You can argue what objective reality is from within the limitations of human observation in the philosophy department, which is down the hall and to your left. That’s not what we’re talking about, here.

From a computer science standpoint you can absolutely mathematically prove the amount of data that is captured in an image and, like I said, no matter how hard you try you cannot add any more data to it that can be actually guaranteed or proven to reflect reality by blowing it up, interpolating it, or attempting to fill in patterns you (or your computer) think are there. That’s because you cannot prove, no matter how the question or its alleged solution are rephrased, that any details your algorithm adds are actually there in the real world except by taking a higher resolution/closer/better/wider spectrum image of the subject in question to compare. And at that point it’s rendered moot anyway, because you just took a higher res/closer/better/wider/etc. picture that contains the required detail, and the original (and its interpolation) are unnecessary.
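A minimal Python sketch of the many-to-one argument, assuming the sensor can be modelled as 2x box averaging of a finer scene (a simplification of real optics and sensors). Two different hypothetical scenes produce an identical capture, so any deterministic enhancer that sees only the capture must give the same answer for both and is wrong for at least one. `enhance` is a hypothetical placeholder for any upscaler.

```python
# Sketch only: box averaging is an assumed, simplified model of image capture.

def downsample_2x(row: list[int]) -> list[float]:
    """Average each non-overlapping pair of fine samples into one coarse pixel."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row), 2)]

scene_a = [0, 100, 50, 50]    # hypothetical scene with a sharp edge on the left
scene_b = [50, 50, 100, 0]    # a different hypothetical scene, edge on the right

captured_a = downsample_2x(scene_a)
captured_b = downsample_2x(scene_b)
print(captured_a, captured_b)  # [50.0, 50.0] [50.0, 50.0]

def enhance(captured: list[float]) -> list[float]:
    """Placeholder for any upscaler; here, naive pixel repetition."""
    return [v for v in captured for _ in range(2)]

same_output = enhance(captured_a) == enhance(captured_b)  # identical input, identical output
both_right = enhance(captured_a) == scene_a and enhance(captured_b) == scene_b
print(same_output, both_right)  # True False
```

Swapping in a smarter `enhance` does not change the conclusion: as long as it sees only the capture, it cannot output both scenes.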

You cannot technically prove it, that’s true, but that does not invalidate the interpolated or extrapolated data, because you will be able to have a certain degree of confidence in them, be able to judge their meaningfulness with a specific probability. And that’s enough, because you are never able to 100% prove something in physical sciences. Never. Even our most reliable observations, strongest theories and most accurate measurements all have a degree of uncertainty. Even the information and quantum theories you rest your argument on are unproven and unprovable by your standards, because you cannot get to 100% confidence. So, if you find that there’s enough evidence for the science you base your understanding of reality on, then rationally and by deductive reasoning you will have to accept that the prediction of a machine learning model that extrapolates some data where the probability of validity is just as great as it is for quantum physics must be equally true.

Well that’s a bit close minded.

Perhaps at some point we will conquer quantum mechanics enough to be able to observe particles at every place and time they have ever and will ever exist. Do that with enough particles and you’ve got a de facto time machine, albeit a read-only one.

So many things we believe to be true today suggest this is not going to happen. The uncertainty principle, and the random nature of nuclear decay chief among them. The former prevents you gaining the kind of information you would need to do this, and the latter means that even if you could, it would not provide the kind of omniscience one might assume.

Limits of quantum observation aside, you also could never physically store the data of the position/momentum/state of every particle in any universe within that universe, because the particles that exist in the universe are the sum total of the materials we could ever use to build the data storage. You’ve got yourself a chicken-and-egg scenario where the egg is 93 billion light years wide, there.

Hold up. Digital zoom is, in all the cases I’m currently aware of, just cropping the available data. That’s not reconstruction, it’s just losing data.

Otherwise, yep, I’m with you there.

See this follow-up:

https://lemmy.world/comment/9061929

Digital zoom makes the image bigger but without adding any detail (because it can’t). People somehow still think this will allow you to see small details that were not captured in the original image.

Also since companies are adding AI to everything, sometimes when you think you’re just doing a digital zoom you’re actually getting AI upscaling.

There was a court case not long ago where the prosecution wasn’t allowed to pinch-to-zoom evidence photos on an iPad for the jury, because the zoom algorithm creates new information that wasn’t there.

There’s a specific type of digital zoom which captures multiple frames and takes advantage of motion between frames (plus inertial sensor movement data) to interpolate to get higher detail. This is rather limited because you need a lot of sharp successive frames just to get a solid 2-3x resolution with minimal extra noise.
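An idealized one-dimensional Python sketch of the multi-frame idea, assuming point sampling, no noise, and a known shift of exactly half a coarse pixel between two frames. None of these assumptions hold in a real camera, where motion must be estimated and blur and noise add up, which is why real gains stay around the 2-3x mentioned above. The extra detail here is genuinely measured, just spread across two frames.

```python
# Idealized sketch: exact half-pixel shift, point sampling, no noise (all assumptions).

scene = [3, 9, 4, 8, 5, 7, 6, 6]         # hypothetical fine-grained scene

frame_1 = scene[0::2]                    # sensor samples positions 0, 2, 4, 6
frame_2 = scene[1::2]                    # a half-pixel shift samples positions 1, 3, 5, 7

def merge_shifted_frames(a: list[int], b: list[int]) -> list[int]:
    """Interleave two frames whose sample grids are offset by half a pixel."""
    merged: list[int] = []
    for x, y in zip(a, b):
        merged.extend([x, y])
    return merged

merged = merge_shifted_frames(frame_1, frame_2)
print(frame_1, frame_2)   # [3, 4, 5, 6] [9, 8, 7, 6]
print(merged == scene)    # True
```

Unlike single-image upscaling, every value in `merged` came from an actual sample.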

clickity clackity

“ENHANCE”

Wait, when did that become OK to do in the first place?

Just for now. Soon this practice will be normalized and widely used; after all, we are in the late-capitalism stage and all violations are relativized.

AI enhanced = made up.

Society = made up, so I’m not sure what your argument is.

My argument is that a video camera doesn’t make up video, an ai does.

video camera doesn’t make up video, an ai does.

What’s that even supposed to mean? Do you even know how a camera works? What about an AI?

Yes, I do. Cameras work by detecting light using a charge-coupled device (CCD) or an active pixel sensor (CMOS). Cameras essentially take a series of pictures, which makes a video. They can have camera or lens artifacts (like rolling shutter illusion or lens flare) or compression artifacts (like DCT blocks) depending on how they save the video stream, but they don’t make up data.

Generative AI video upscaling works by essentially guessing (generating) what would be there if the frame were larger. I’m using “guessing” colloquially, since it doesn’t have agency to make a guess. It uses a model that has been trained on real data. What it can’t do is show you what was actually there, just its best guess using its diffusion model. It is literally making up data. Like, that’s not an analogy, it actually is making up data.

Ok, you clearly have no fucking idea what you’re talking about. No, reading a few terms on Wikipedia doesn’t count as “knowing”.

CMOS isn’t the only transducer for cameras - in fact, no one would start the explanation there. Generative AI doesn’t have to be based on diffusion. You’re clearly just repeating words you’ve seen used elsewhere - you are the AI.

Everything that is labeled “AI” is made up. It’s all just statistically probable guessing, made by a machine that doesn’t know what it is doing.

It’s incredibly obvious when you call the current generation of AI by its full name, generative AI. It’s creating data, that’s what it’s generating.

Why not make it a fully AI court and save time if they were going to go that way. It would save so much time and money.

Of course it wouldn’t be very just, but then regular courts aren’t either.

Be careful what you wish for.

You forgot the /s

Honestly, an open-source auditable AI Judge/Justice would be preferable to Thomas, Alito, Gorsuch and Barrett any day.

In the same vein, Bloomberg just did a great study on ChatGPT 3.5 ranking resumes, and it had an extremely noticeable bias of ranking black names lower than the average and Asian/white names far higher despite similar qualifications.

Archive source: https://archive.is/MrZIm

Perfect, a drop-in replacement!

Me, testifying to the AI judge: “Your honor I am I am I am I am I am I am I am I am I am”

AI Judge: “You are you are you are you are you are you…”

Me: Escapes from courthouse while the LLM is stuck in a loop

The fact that it made it that far is really scary.

I’m starting to think that yes, we are going to have some new middle ages before going on with all that “per aspera ad astra” space colonization stuff.

Aren’t we already in a kind of dark age?

People denying science, people scared of diseases and vaccination, people using anything AI or blockchain as if it were magic, people defending power-hungry, all-promising dictators, people divided and calling the other side barbaric. And of course, wars based on religion.

Seems to me we’re already in the dark.

Aren’t we already in a kind of dark age?

A bit over 150 years ago, slavery was legal (and commonplace) in the United States.

Sure, lots of shitty stuff in the world today… but you don’t have to go far back to reach a time when a sheriff with zero evidence, relying on unverified accusations and hearsay, would’ve put up a “wanted dead or alive” poster with a drawing of the guy’s face created by an artist who had never even laid eyes on the alleged murderer.

Well, the dark ages came after late antiquity, when slavery was normal. And it took a few centuries for slavery to die out in European societies, though serfdom remained, which wasn’t too different. And serfdom in England formally existed even in the 19th century. I’m not talking about Russia, of course, where it played the same role as slavery in the US South.

EDIT: What I meant is that this is more about knowledge and civilization, not good and bad. Also, 150 years is too much, but compared to 25 years ago, I think things are worse in many regards.

Aren’t we already in a kind of dark age?

In the sense that making the things that form the backbone of our civilization has become a process, and a body of knowledge, that is heavily centralized and removed from most people’s daily lives, yes.

Via many small changes we’ve come to a situation where everybody uses Intel and AMD or other very complex hardware, directly or in various mechanisms, which requires infrastructure and knowledge that are beyond the means of most nation-states to produce.

People can no longer make a computer usable for our daily processes by soldering something together from TTL logic and parts bought in a radio store, even though we could perform many tasks with such computers if not for the network effect. We depend on something even smart people can’t do on their own, period.

It’s like tanks or airplanes or ICBMs.

A decent automatic rifle or grenade or a mortar can well be made in a workshop. Frankly, even an alternative to a piece of 1950s field artillery can be, along with its ammunition.

What we depend on in daily civilian computing is as complex as ICBMs, and this knowledge is even more sparsely distributed in the society than the knowledge of how ICBMs work.

And also, of course, the tendency for things to be less repairable (remember the time when everything came with manuals and schematics?) and for people to treat them like magic.

This is both reminiscent of Asimov’s Foundation (only there Imperial machines were massive, while Foundation’s machines were well miniaturized, but the social mechanisms of the Imperial decay were described similarly) and just psychologically unsettling.

Oh for sure. We are already in a period that will have some fancy name in future anthropology studies but the question is how far down do we still have to go before we see any light.

enhance

enhance

enhance

Used to be that people called it the “CSI Effect” and blamed it on television.

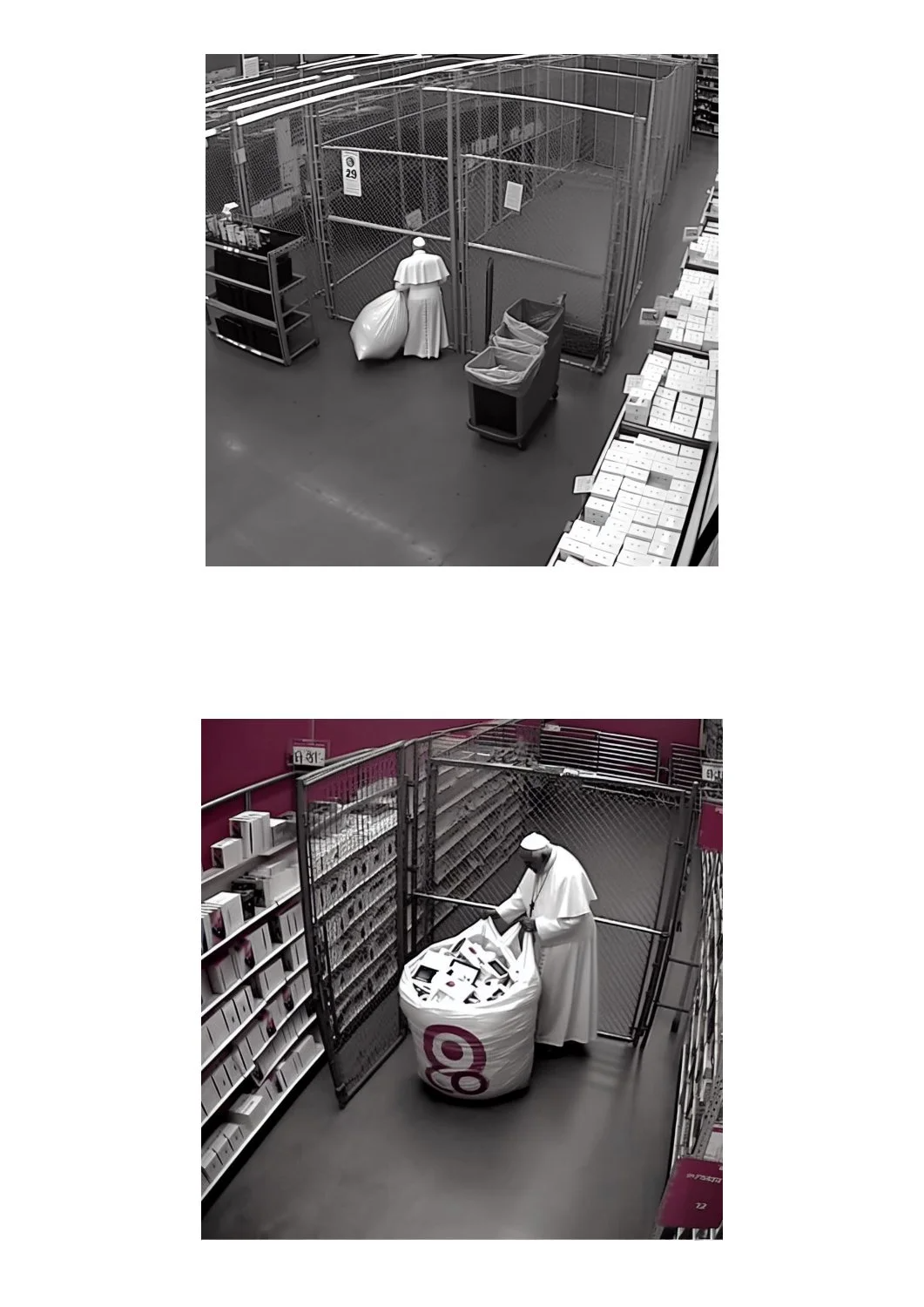

Funny thing. While people worry about unjust convictions, the “AI-enhanced” video was actually offered as evidence by the defense.

Think about how they reconstructed what the Egyptian Pharaohs looked like, or what a child kidnapped at age 7 would look like at age 12. Yes, it can’t make something look exactly right, but it also isn’t just randomly guessing. Of course, it can be abused by people who want juries to THINK the AI can perfectly reproduce stuff, but that is a problem with people’s knowledge of tech, not the tech itself.

Unfortunately, the people with no knowledge of tech will then proceed to judge if someone is innocent or guilty.

Nor evidence, for that matter

I think we need to STOP calling it “Artificial Intelligence”. IMHO that is a VERY misleading name. I do not consider guided pattern recognition to be intelligence.

How is guided pattern recognition different from imagination (and therefore intelligence), though?

Your comment is a good reason why these tools have no place in the courtroom: The things you describe as imagination.

They’re image generation tools that will generate a new, unrelated image that happens to look similar to the source image. They don’t reconstruct anything and they have no understanding of what the image contains. All they know is which color the pixels in the output might probably have given the pixels in the input.

It’s no different from giving a description of a scene to an author, asking them to come up with any event that might have happened in such a location and then trying to use the resulting short story to convict someone.

They don’t reconstruct anything and they have no understanding of what the image contains.

With enough training they, in fact, will have some understanding. But that still leaves us with that “enhance meme” problem aka the limited resolution of the original data. There are no means to discover what exactly was hidden between visible pixels, only approximate. So yes you are correct, just described it a bit differently.

they, in fact, will have some understanding

These models have spontaneously acquired a concept of things like perspective, scale and lighting, which you can argue is already an understanding of 3D space.

What they do not have (and IMO won’t ever have) is consciousness. The fact we have created machines that have understanding of the universe without consciousness is very interesting to me. It’s very illuminating on the subject of what consciousness is, by providing a new example of what it is not.

I think AI doesn’t need consciousness to be able to say what is in the picture, or to guess what specific details could contain.

There are a lot of other layers in brains that are missing in machine learning. These models don’t form world models, and some don’t have an understanding of facts and have no means of ensuring consistency, to start with.

They absolutely do contain a model of the universe which their answers must conform to. When an LLM hallucinates, it is creating a new answer which fits its internal model.

Statistical associations are not equivalent to a world model, especially because they are neither deterministic nor do they even try to avoid giving conflicting answers. They model only the use of language.

I mean if we consider just the reconstruction process used in digital photos it feels like current ai models are already very accurate and won’t be improved by much even if we made them closer to real “intelligence”.

The point is that reconstruction itself can’t reliably produce missing details, not that a “properly intelligent” mind will be any better at it than current ai.

I agree. It’s restricted intelligence (RI), at best, and even that can be argued against.

It’s the new “4k”. Just buzzwords to get clicks.

My disappointment when I realised “4k” was only 2160p 😔

I can’t disagree with this… After basing the size off of the vertical pixel count, we’re now going to switch to the horizontal count to describe the resolution.

on the contrary! it’s a very old buzzword!

AI should be called machine learning. Much better. If I had my way it would be called “fancy curve fitting” henceforth.

Technically speaking AI is any effort on the part of machines to mimic living things. So computer vision for instance. This is distinct from ML and Deep Learning which use historical statistical data to train on and then forecast or simulate.

“machines mimicking living things” does not mean exclusively AI. Many scientific fields are trying to mimic living things.

AI is a very hazy concept imho as it’s difficult to even define when a system is intelligent - or when a human is.

That’s not what I said.

What I typed there is not my opinion.

This is the technical, industry distinction between AI and things like ML and neural networks.

“Mimicking living things” is obviously not exclusive to AI. It is exclusive to AI as compared to ML, for instance.

I do not consider guided pattern recognition to be intelligence.

Humanity has entered the chat

Seriously though, what name would you suggest?

Maybe guided pattern recognition (GPR).

Or Bob.

Calling it Bob is not going to help discourage people from attributing intelligence. They’ll start wishing “Bob” a happy birthday.

Do not personify the machine.

My Conscious Cognitive Correlator is the real shit.

What is the definition of intelligence? Does it require sentience? Can a data set be intelligently compiled into interesting results without human interaction? Yes the term AI is stretched a bit thin but I believe it has enough substance to qualify.

You, and humans in general, are also just sophisticated pattern recognition and matching machines. If neural networks are not intelligent, then you are not intelligent.

This may be the dumbest statement I have yet seen on this platform. That’s like equating a virus with a human by saying both things replicate themselves so they must be similar.

You can say what you like, but we have absolutely zero true and full understanding of what human intelligence actually is or how it works.

“AI”, or whatever you want to call it, is not at all similar.

Optical Character Recognition used to be firmly in the realm of AI until it became so common that even the post office uses it. Nowadays, OCR is so common that instead of being proper AI, it’s just another mundane application of a neural network. I guess eventually Large Language Models will fall outside the scope of AI too.

A term created in order to vacuum up VC funding for spurious use cases.

I do not consider guided pattern recognition to be intelligence.

That’s a you problem, this debate happened 50 years ago and we decided Intelligence is the right word.

You forget that we can change these definitions any time we see fit.

You cannot, because you are not a scientist and judging from your statements, you do not know what you’re talking about.

We could… if it made any sense to do so, which it doesn’t.