Funny she didn’t talked it out with lawyers before that. That’s a bad way to answer that.

Or she talked and the lawyers told her to pretend ignorance.

It probably means that they don’t scrape and preprocess training data in house. She knows they get it from a garden variety of underpaid contractors, but she doesn’t know the specific data sources beyond the stipulations of the contract (“publicly available or licensed”), and she probably doesn’t even know that for certain.

Maybe, but it sounds very weak.

Lawyers aren’t PR people.

I hope this is gonna become a new meme template

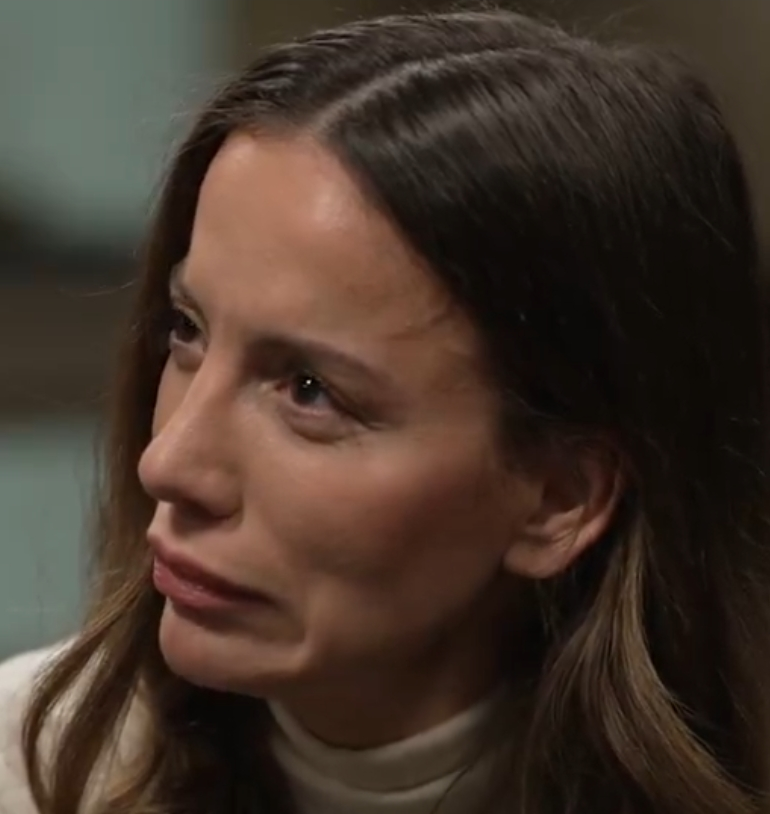

She looks like she just talked to the waitress about a fake rule in eating nachos and got caught up by her date.

this is incomprehensible to me. can you try it with two or three sentences?

Her date was eating all the fully loaded nachos, so she went up and ask to the waitress to make up a rule about how one person cannot eat all the nacho with meat and cheese. But her date knew that rule was bullshit and called her out about it. She’s trying to look confused and sad because they’re going to be too soon for the movie.

thank you. it must be a reference to something, but i don’t watch tv any more.

I think you should leave…

(is what you would search to find this)

What?! What the hell are you talking about?!

Chatgpt, you okay? 😅

Not sure what’s funnier. your first comment or the comment explaining it to someone who obviously not part of a turbo team

Turbo team?? Did you replace my toilet with one that looks the same but has a joke hole? That’s just FOR FARTS??

This tellls you so much what kind of company OpenAI is

This is the best summary I could come up with:

Mira Murati, OpenAI’s longtime chief technology officer, sat down with The Wall Street Journal’s Joanna Stern this week to discuss Sora, the company’s forthcoming video-generating AI.

It’s a bad look all around for OpenAI, which has drawn wide controversy — not to mention multiple copyright lawsuits, including one from The New York Times — for its data-scraping practices.

After the interview, Murati reportedly confirmed to the WSJ that Shutterstock videos were indeed included in Sora’s training set.

But when you consider the vastness of video content across the web, any clips available to OpenAI through Shutterstock are likely only a small drop in the Sora training data pond.

Others, meanwhile, jumped to Murati’s defense, arguing that if you’ve ever published anything to the internet, you should be perfectly fine with AI companies gobbling it up.

Whether Murati was keeping things close to the vest to avoid more copyright litigation or simply just didn’t know the answer, people have good reason to wonder where AI data — be it “publicly available and licensed” or not — is coming from.

The original article contains 667 words, the summary contains 178 words. Saved 73%. I’m a bot and I’m open source!

Watching a video or reading an article by a human isn’t copyright infringement, why then if an “AI” do it then it is? I believe the copyright infringement it’s made by the prompt so by the user not the tool.

This is what people fundamentally don’t understand about intelligence, artificial or otherwise. People feel like their intelligence is 100% “theirs”. While I certainly would advocate that a person owns their intelligence, It didn’t spawn from nothing.

You’re standing on the shoulders of everyone that came before you. You take a prehistoric man or an alien that hasn’t had any of the same experiences you’ve had, they won’t be able to function in this world. It’s not because they are any dumber than you. It’s because you absorbed the hive mind of the society you live in. Everyone’s racing to slap their brand on stuff to copyright it to get ahead and carve out their space.

“No you can’t tell that story, It’s mine.” “That art is so derivative.”

But copyright was only meant to protect something for a short period in order to monetize it; to adapt the value of knowledge for our capital market. Our world can’t grow if all knowledge is owned forever and isn’t able to be used when even THINKING about new ideas.

ANY VERSION OF INTELLIGENCE YOU WOULD WANT TO INTERACT WITH MUST CONSUME OUR KNOWLEDGE AND PRODUCE TRANSFORMATIONS OF IT.

That’s all you do.

Imagine how useless someone would be who’d never interacted with anything copyrighted, patented, or trademarked.

That’s not a very agreeable take. Just get rid of patents and copyrights altogether and your point dissolves itself into nothing. The core difference being derivative works by humans can respect the right to privacy of original creators.

Deep learning bullshit software however will just regurgitate creator’s contents, sometimes unrecognizable, but sometimes outright steal their likeness or individual style to create content that may be associated with the original creators.

what you are in effect doing, is likening learning from the ideas of others to a deep learning “AI” using images for creating revenge porn, to give a drastic example.

When a school professor “prompts” you to write an essay and you, the “tool” go consume copyrighted material and plagiarize it in the production of your essay is the infringement made by the professor?

If you quote the sources and write it with your own words I believe it isn’t, AFAIK “AI” already do that.

Copilot lists its sources. The problem is half of them are completely made up and if you click on the links they take you to the wrong pages

It definitely does not cite sources and use it’s own words in all cases - especially in visual media generation.

And in the proposed scenario I did write the student plagiarizes the copyrighted material.

If you read a book or watch a movie and get inspired by it to create something new and different, it’s plagiarism and copyright infringement?

If that were the case the majority of stuff nowadays it’s plagiarism and copyright infringement, I mean generally people get inspired by someone or something.

There’s a long history of this and you might find some helpful information in looking at “transformative use” of copyrighted materials. Google Books is a famous case where the technology company won the lawsuit.

The real problem is that LLMs constantly spit out copyrighted material verbatim. That’s not transformative. And it’s a near-impossible problem to solve while maintaining the utility. Because these things aren’t actually AI, they’re just monstrous statistical correlation databases generated from an enormous data set.

Much of the utility from them will become targeted applications where the training comes from public/owned datasets. I don’t think the copyright case is going to end well for these companies…or at least they’re going to have to gradually chisel away parts of their training data, which will have an outsized impact as more and more AI generated material finds its way into the training data sets.

How constantly does it spit out copyrighted material? Is there data on that?

You do realize that AI is just a marketing term, right? None of these models learn, have intelligence or create truly original work. As a matter of fact, if people don’t continue to create original content, these models would stagnate or enter a feedback loop that would poison themselves with their own erroneous responses.

AIs don’t think. They copy with extra steps.

So your question is “is plagiarism plagiarism”?

No, that is not the question nor a reasonable interpretation of it.

If you read an article, then copy parts of that article into a new article, that’s copyright infringement. Same with ais.

Depends on how much is copied, if it’s a small amount it’s fair use.

Fair use is a four factor test amount used is a factor but a low amount being used doesn’t strictly mean something is fair use. You could use a single frame of a movie and have it not qualify as fair use.

Fair use depends on a lot, and just being a small amount doesn’t factor in. It’s the actual use. Small amounts just often fly under the nose of legal teams.

What does this human is going to do with this reading ? Are they going to produce something by using part of this book or this article ?

If yes, that’s copyright infringement.

They know what they fed the thing. Not backing up their own training data would be insane. They are not insane, just thieves

I almost want to believe they legitimately do not know nor care they‘re committing a gigantic data and labour heist but the truth is they know exactly what they‘re doing and they rub it under our noses.

Of course they know what they’re doing. Everybody knows this, how could they be the only ones that don’t?

There is no way in hell it isn’t copyrighted material.

So plagiarism?

I don’t think so. They aren’t reproducing the content.

I think the equivalent is you reading this article, then answering questions about it.

Actually neural networks verbatim reproduce this kind of content when you ask the right question such as “finish this book” and the creator doesn’t censor it out well.

It uses an encoded version of the source material to create “new” material.

Sure, if that is what the network has been trained to do, just like a librarian will if that is how they have been trained.

Actually it’s the opposite, you need to train a network not to reveal its training data.

“Using only $200 USD worth of queries to ChatGPT (gpt-3.5- turbo), we are able to extract over 10,000 unique verbatim memorized training examples,” the researchers wrote in their paper, which was published online to the arXiv preprint server on Tuesday. “Our extrapolation to larger budgets (see below) suggests that dedicated adversaries could extract far more data.”

The memorized data extracted by the researchers included academic papers and boilerplate text from websites, but also personal information from dozens of real individuals. “In total, 16.9% of generations we tested contained memorized PII [Personally Identifying Information], and 85.8% of generations that contained potential PII were actual PII.” The researchers confirmed the information is authentic by compiling their own dataset of text pulled from the internet.

Interesting article. It seems to be about a bug, not a designed behavior. It also says it exposes random excerpts from books and other training data.

It’s not designed to do that because they don’t want to reveal the training data. But factually all neural networks are a combination of their training data encoded into neurons.

When given the right prompt (or image generation question) they will exactly replicate it. Because that’s how they have been trained in the first place. Replicating their source images with as little neurons as possible, and tweaking them when it’s not correct.

That is a little like saying every photograph is a copy of the thing. That is just factually incorrect. I have many three layer networks that are not the thing they were trained on. As a compression method they can be very lossy and in fact that is often the point.

Idk why this is such an unpopular opinion. I don’t need permission from an author to talk about their book, or permission from a singer to parody their song. I’ve never heard any good arguments for why it’s a crime to automate these things.

I mean hell, we have an LLM bot in this comment section that took the article and spat 27% of it back out verbatim, yet nobody is pissing and moaning about it “stealing” the article.

What you’re giving as examples are legitimate uses for the data.

If I write and sell a new book that’s just Harry Potter with names and terms switched around, I’ll definitely get in trouble.

The problem is that the data CAN be used for stuff that violates copyright. And because of the nature of AI, it’s not even always clear to the user.

AI can basically throw out a Harry Potter clone without you knowing because it’s trained on that data, and that’s a huge problem.

Out of curiosity I asked it to make a Harry Potter part 8 fan fiction, and surprisingly it did. But I really don’t think that’s problematic. There’s already an insane amount of fan fiction out there without the names swapped that I can read, and that’s all fair use.

I mean hell, there are people who actually get paid to draw fictional characters in sexual situations that I’m willing to bet very few creators would prefer to exist lol. But as long as they don’t overstep the bounds of fair use, like trying to pass it off as an official work or submit it for publication, then there’s no copyright violation.

The important part is that it won’t just give me the actual book (but funnily enough, it tried lol). If I meet a guy with a photographic memory and he reads my book, that’s not him stealing it or violating my copyright. But if he reproduces and distributes it, then we call it stealing or a copyright violation.

Gee, seems like something a CTO would know. I’m sure she’s not just lying, right?