OK, let’s give a little bit of context. I will turn 40 in a couple of months and I’ve been a C++ software developer for more than 18 years. I enjoy coding, and I enjoy writing “good” code, readable and so on.

However, for a few months now, I have been really afraid for the future of the job I like, given the progress of artificial intelligence. Very often I can’t sleep at night because of this.

I fear that my job, while not completely disappearing, will become a very boring job consisting of debugging automatically generated code, or that the job will disappear altogether.

For now, I’m not using AI. I have a few colleagues who do, but I do not want to because, one, it removes a part of the coding I like and, two, I have the feeling that using it is sawing off the branch I’m sitting on, if you see what I mean. I fear that in the near future, people not using it will be fired because management sees them as less productive…

Am I the only one feeling this way? I have the feeling all tech people are enthusiastic about AI.

If you are, it should be due to working for the wrong people. Those that don’t understand what’s what and only seek profit religiously.

Thanks for the readable code though.

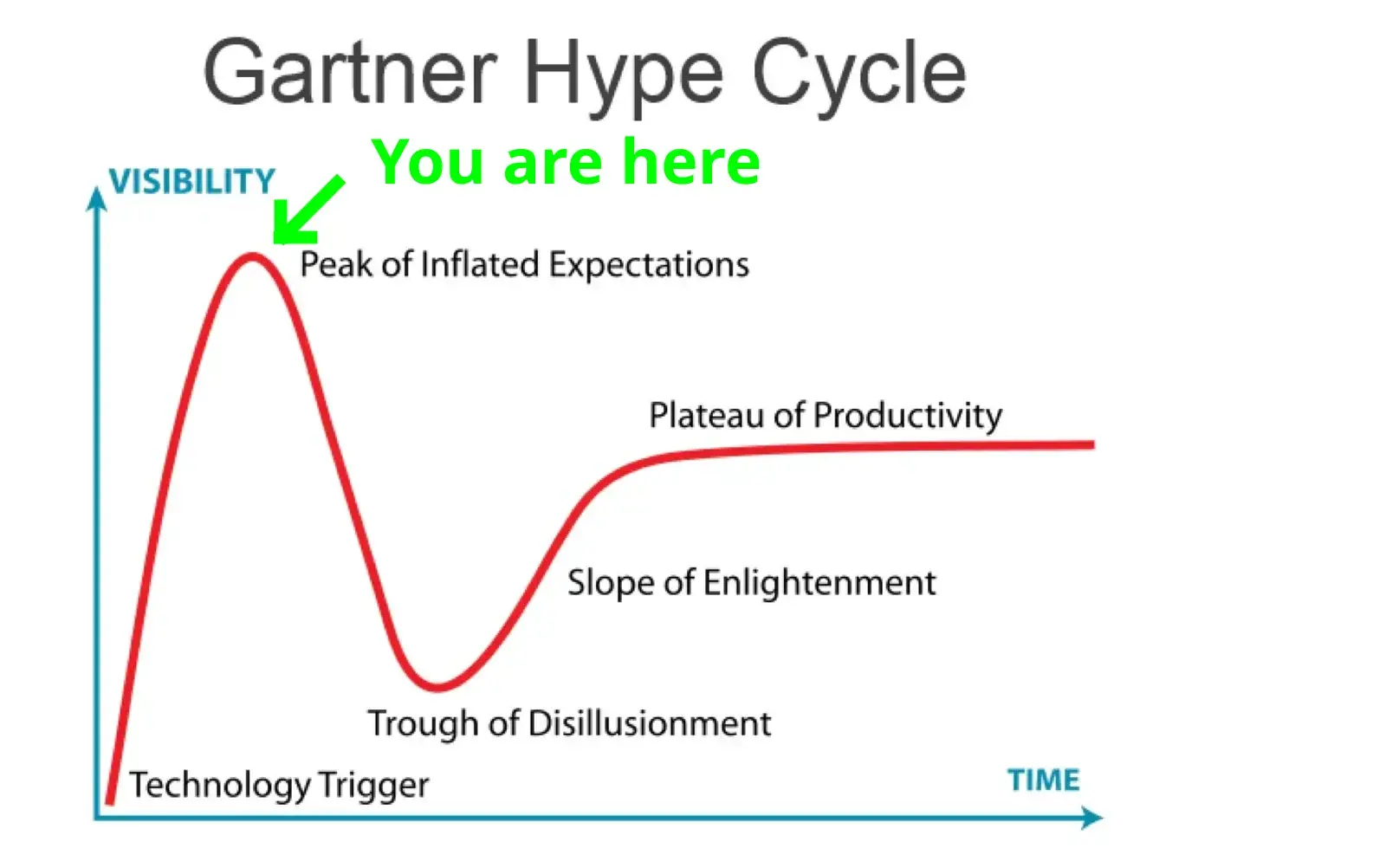

There’s a massive amount of hype right now, much like everything was blockchains for a while.

AI/ML is not able to replace a programmer, especially not a senior engineer. Right now I’d advise you do your job well and hang tight for a couple of years to see how things shake out.

(me = ~50 years old DevOps person)

Great advice. I would just add: learn to leverage those tools effectively. They are a great productivity boost. Another side effect once they become popular is that some skills we already have will be harder to learn, so they might be in higher demand.

Anyway, make sure you put aside enough money to not have to worry about such things 😃

I’m only in my very first year of DevOps, and already I have five years’ worth of AI giving me hilarious, sad and ruinous answers regarding the field.

I needed proper knowledge of Ansible ONCE so far, and it managed to lie about Ansible to me TWICE. AI is many things, but an expert system it is not.

He has a good point. Specifying precisely what the program does is the actual difficult part and won’t be done properly by this current LLM system since it’s creating something new and requires actual thought and understanding.

Here is an alternative Piped link(s):

Uncle Bob’s response when asked if AI will takeover software engineering job.(1m)


You’re certainly not the only software developer worried about this. Many people across many fields are losing sleep thinking that machine learning is coming for their jobs. Realistically automation is going to eliminate the need for a ton of labor in the coming decades and software is included in that.

However, I am quite skeptical that neural nets are going to be reading and writing meaningful code at large scales in the near future. If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself. It’s reasonable to be concerned that employees will need to use these tools in the near future. That’s because these are new, useful tools and software developers are generally expected to use all tooling that improves their productivity.

> If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

Bingo

I won’t say it won’t happen soon. And it seems fairly likely to happen at some point. But at that point, so much of the world will have changed because of the other impacts of having AI, as it was developing to be able to automate thousands of things that are easier than programming, that “will I still have my programming job” may well not be the most pressing issue.

For the short term, the primary concern is programmers who can work much faster with AI replacing those that can’t. SOCIAL DARWINISM FIGHT LET’S GO

> I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself.

I agree, sosodev. I think it would be wise to at least be aware of modern A.I.'s current capabilities and inadequacies, because honestly, you gotta know what you’re dealing with.

If you ignore and avoid A.I. outright, every new iteration will come as a complete surprise, leaving you demoralized and feeling like shit. More importantly, there will be less time for you to adapt because you’ve been ignoring it when you could’ve been observing and planning. A.I. currently does not have that advantage, OP. You do.

I think all jobs that are pure mental labor are under threat to a certain extent from AI.

It’s not really certain when real AGI is going to start to become real, but it certainly seems possible that it’ll be real soon, and if you can pay $20/month to replace a six-figure software developer then a lot of people are in trouble, yes. Like a lot of other revolutions like this that have happened, not all of it will be “AI replaces engineer”; some of it will be “engineer who can work with the AI and complement it to be productive will replace engineer who can’t.”

Of course that’s cold comfort once it reaches the point that AI can do it all. If it makes you feel any better, real engineering is much more difficult than a lot of other pure-mental-labor jobs. It’ll probably be one of the last to fall, after marketing, accounting, law, business strategy, and a ton of other white-collar jobs. The world will change a lot. Again, I’m not saying this will happen real soon. But it certainly could.

I think we’re right up against the cold reality that a lot of the systems that currently run the world don’t really care if people are taken care of and have what they need in order to live. A lot of people who aren’t blessed with education and the right setup in life have been struggling really badly for quite a long time no matter how hard they work. People like you and me who made it well into adulthood just being able to go to work and that be enough to be okay are, relatively speaking, lucky in the modern world.

I would say you’re right to be concerned about this stuff. I think starting to agitate for a better, more just world for all concerned is probably the best thing you can do about it. Trying to hold back the tide of change that’s coming doesn’t seem real doable without that part changing.

> It’s not really certain when real AGI is going to start to become real, but it certainly seems possible that it’ll be real soon

What makes you say that? The entire field of AI has not made any progress towards AGI since its inception and if anything the pretty bad results from language models today seem to suggest that it is a long way off.

You would describe “recognizing handwritten digits some of the time” -> “GPT-4 and Midjourney” as no progress in the direction of AGI?

It hasn’t reached AGI or any reasonable facsimile yet, no. But up until a few years ago something like ChatGPT seemed completely impossible, and then a few big key breakthroughs happened, and now the impossible is possible. It seems by no means out of the question that a few more big breakthroughs could happen with AGI, especially with as much attention and effort as is going into the field now.

It’s not that machine learning isn’t making progress, it’s just many people speculate that AGI will require a different way of looking at AI. Deep Learning, while powerful, doesn’t seem like it can be adapted to something that would resemble AGI.

You mean, it would take some sort of breakthrough?

(For what it’s worth, my guess about how it works is to generally agree with you in terms of real sentience – just that I think (a) neither one of us really knows that for sure (b) AGI doesn’t require sentience; a sufficiently capable fakery which still has limitations can still upend the world quite a bit).

Yes, and most likely more of a paradigm shift. The way deep learning models work is largely around static statistical models. The main issue here isn’t the statistical side, but the static nature. For AGI this is a significant hurdle because as the world evolves, or simply these models run into new circumstances, the models will fail.

It’s largely the reason why autonomous vehicles have sorta hit a standstill. It’s the last 1% of cases (what if an intersection is out, what if the road is poorly maintained, etc.) that are so hard for these models, as they require “thought” and not just input/output.

LLMs have shown that large quantities of data seem to approach some sort of generalized knowledge, but researchers don’t necessarily agree on that (https://arxiv.org/abs/2206.07682). So if we can’t get to more emergent abilities, it’s unlikely AGI is on the way. But as you said, combining and interweaving these systems may get something close.

Nobody knows if and when programming will be automated in a meaningful way. But once we have the tech to do it, we can automate pretty much all work. So I think this will not be a problem for programmers until it’s a problem for everyone.

Betteridge’s law of headlines: No.

Kind of nice to see NFTs breaking through the floor at the trough of disillusionment, never to return.

Currently at the crossroads between trough of disillusionment and slope of enlightenment

The trough of disillusionment is my favorite.

If your job truly is in danger, then not touching AI tools isn’t going to change that. The best you can do for yourself is to explore what these tools can do for you and figure out if they can help you become more productive so that you’re not first on the chopping block. Maybe in doing so, you’ll find other aspects of programming that you enjoy just as much and that these tools don’t yet automate away. Or maybe you’ll find that they’re not all they’re hyped up to be, which would ease your worry.

As an example:

Salesforce has been trying to replace developers with “easy to use tools” for a decade now.

They’re no closer than when they started. Yes, the new, improved flow builder and omni studio look great initially for the simple little preplanned demos they make. But they’re very slow, unsafe to use and generally impossible to debug.

As an example, a common use case is: sales guy wants to create an opportunity with a product. They go on about how omni studio lets an admin create a set of independently loading pages that let them:

• create the opportunity record, associating it with an existing account number.

• add a selection of products to it.

But what if the account number doesn’t exist? It fails. It can’t create the account for you, nor prompt you to do it in a modal. The opportunity page only works with the opportunity object.

Also, if the user tries to go back, it doesn’t allow them to delete products already added to the opportunity.

Once we get actual AIs that can do context and planning, then our field is in danger. But so long as we’re going down the glorified chatbot route, it’s not in danger.

I’m both unenthusiastic about A.I. and unafraid of it.

Programming is a lot more than writing code. A programmer needs to set up a reliable deployment pipeline, or write a secure web-facing interface, or make a usable and accessible user interface, or correctly configure logging, or identity and access, or a million other nuanced, pain-in-the-ass tasks. I’ve heard some programmers occasionally decrypt what the hell the client actually wanted, but I think that’s a myth.

The history of automation is somebody finds a shortcut - we all embrace it - we all discover it doesn’t really work - someone works their ass off on a real solution - we all pay a premium for it - a bunch of us collaborate on an open shared solution - we all migrate and focus more on one of the 10,000 other remaining pain-in-the-ass challenges.

A.I. will get better, but it isn’t going to be a serious viable replacement for any of the real work in programming for a very long time. Once it is, Murphy’s law and history teach us that there’ll be plenty of problems it still sucks at.

Man, it’s a tool. It will change things for us, it is very powerful; but still a tool. It does not “know” anything, there’s no true intelligence in the things we now call “AI”. For now, it is really useful as a rubber duck: it can make interesting suggestions, help you explore big code bases faster, and even be useful for creating boilerplate. But the code it generates is usually not very trustworthy and is of lower quality.

The reality is not that we will lose our jobs to it, but that companies will expect more productivity from us using these tools. I recommend you try ChatGPT (the best in class for now), and try to understand its strengths and limitations.

Remember: this is just an autocomplete on steroids that does more than the regular version but makes the same types of errors.

Your job is automating electrons, and now some automated electrons are threatening your job.

I have to imagine this is similar to how farmers felt when large-scale machinery became widely available.

Huge numbers of people migrated to cities to find work when this happened. Super interesting part of history! 👍

I don’t think you are disturbed by AI, but by capitalism doing anything it can to pay you as little as possible. From a pure value* perspective, assuming your niche skills in C++ are useful*, you have nothing to worry about. You should be paid the same regardless. But in our society, if replacing you with someone “good enough” will work for the business, then yes, you should be worried. But AI isn’t the thing you should be upset by.

*This is obviously subjective, but the existence of AI with you troubleshooting vs fully replacing you is out of scope here.

This is a real danger in the long term. If the advancement of AI and robotics reaches a certain level, it can detach a big portion of the lower and middle classes from society’s flow of wealth and disrupt structures that have existed since the early industrial revolutions. The educated common man stops being an asset. The whole world becomes a banana republic where only industry and government are needed and there is an impassable gap between common people and the uncaring elite.

White collar never should have been getting paid so much more than blue collar and I welcome seeing the shift balance out, so everyone wants to eat the rich.

> White collar never should have been getting paid so much more than blue collar

Actually I see that the other way around. Blue collar should have never been paid so much less than white collar.

Rich will have weapons and technology. I see 1984 + hunger games scenario more likely.

This is exactly what I see as the risk. However, the elites running industry are, on average, fucking idiots. So, we have been seeing frequent cases of them trying to replace people whose jobs they don’t understand, with technology that even leading scientists don’t fully understand, in order to keep those wages for themselves, all in spite of those who do understand the jobs saying that it is a bad idea.

Don’t underestimate the willingness of upper management to gamble on things and inflict the consequences of failure on the workforce. Nor their willingness to switch to a worse solution, not because it is better or even cheaper but because it means giving less to employees, if they think that they can get away with it.

Right. I agree that in our current society, AI is a net loss for most of us. There will be a few lucky ones that will almost certainly be paid more than they are now, but that will be at the cost of everyone else, and even they will certainly be paid less than the shareholders and executives. The end result is a much lower quality of life for basically everyone. Remember what the Luddites were actually protesting and you’ll see how AI is no different.

I’m in a similar place to you career-wise. Personally, I’m not concerned about becoming just a “debugger.” What I’m expecting this job to look like in a few years is more like “the same as now, except I’ve got a completely free team of ‘interns’ that do all the menial stuff for me.” Every human programmer will become a lead programmer, deciding what stuff our AIs do for us and putting it all together into the finished product.

Maybe a few years further along the AI assistants will be good enough to handle that stuff better than we do as well. At that point we stop being lead programmers and we all become programming directors.

So think of it like a promotion, perhaps.

Why would there be a team of AIs in this scenario under the human, instead of just one AI entity?

The role of the human is to tell the AI what it’s supposed to do. If you’re worried about AI that’s sophisticated enough to be completely self-directed then you’re worrying about AGI, which will be so world-changing that piddly little concerns such as “what about my job?” are pretty trivial.

No, I meant keeping the human directing things but they could do it with one AI under them

Well yes, then. That’s what I said. You’d be a programmer who had free underlings doing whatever grunt work you directed them to.

Or are you questioning my use of the term “team” for the AIs? LLMs are specialized in various ways, you’d likely want to have multiple ones that handle different tasks.